By Natasha Diederen, Sahra Yusuf, and Olga Guțan

I. Introduction

An algorithm is a finite sequence of well-defined, computer-implementable instructions, typically designed to solve a class of specific problems or to perform a computation. Similarly to how we use our brains to (sometimes wrongly) categorize things in order to make sense of the world, a computer needs an algorithm to make sense of what the user is asking it to do. Since an algorithm is a way of communication between two vastly different entities — a human and a machine — some information gets lost along the way. The process is intellectually intriguing to witness, however, problems can arise when we use algorithms to make decisions that influence the lives of humans and other self-aware living beings.

Algorithms are indispensable for efficiently processing data; therefore they will continue to be part of our programming ecosystem. However, we can (and must) keep some space in our minds for the additional nuances that reality contains. While a programmer may not be able to fully convey these nuances to a computer, we must be aware that no algorithm’s output is final, all-encompassing, and universally true. Furthermore, we must be mindful of the conclusions we draw from the output of algorithms.

II. Algorithms Gone Wrong

Broadly speaking, potential pitfalls for algorithms are manifold. The issues stem from the nature of the algorithms — a communication tool between a human entity and a nonhuman entity (a computer). These problems morph into different real-life issues based on what types of algorithms we use and what we use them for.

Even when playing with algorithms intended to solve toy problems that we already have the answers to, we can notice errors. However, in real life, data is (even) more messy and consequences are far larger. In 2018, Elaine Herzberg was hit and killed by a self-driving taxi. She was jaywalking in the dark with a bicycle by her side and the car alternated between classifying her as a person and a bicycle, thus miscalculating her trajectory and not classifying Elaine as an obstruction. In this case, the safety driver was distracted by a television program, and thus not entirely blameless. However, this serves as an example of how our blind trust in the reliability of algorithms can often result in complacency, with far reaching consequences.

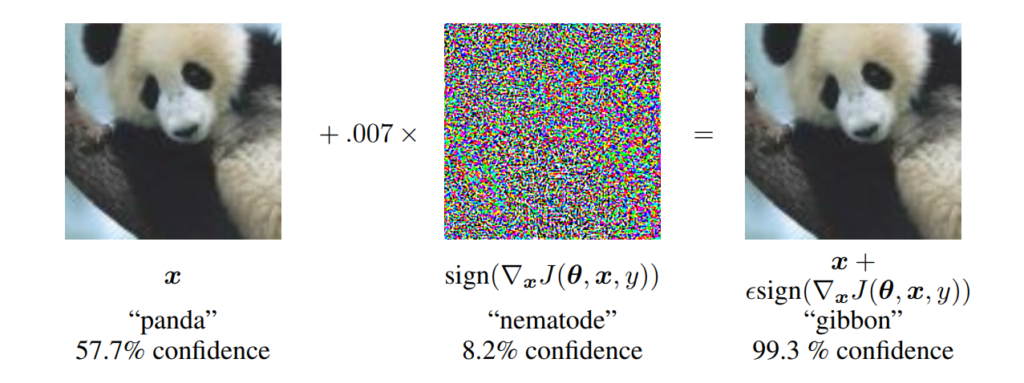

A further example of error in algorithms is adversarial attacks on neural networks. This is when researchers (or hackers) feed a neural network a modified image, where changes made to the image are imperceptible to the human eye. Nevertheless, this can result in incorrect classification by neural networks, with high confidence to boot.

In Figure 1 we see how by adding an imperceptibly small vector whose elements are equal to the sign of the elements of the gradient of the cost function with respect to the input, we can change GoogLeNet’s classification of the image. Here the \(\epsilon\) of 0.007 corresponds to the magnitude of the smallest bit of an 8 bit image encoding after GoogLeNet’s conversion to real numbers.

Although researchers are working on making neural networks more robust to these sorts of attacks, susceptibility to adversarial attacks seems to be an inherent weakness of deep neural networks. This has serious security implications as computer vision increasingly relies on these models for facial recognition, autonomous vehicles, speech recognition and much more.

III. Geometry Processing with Imperfect Data

In geometry processing, there is often a need for refinement of geometric data, especially when the data is taken from “real” contexts. Examples of imperfections in 3D models include gaps, self-intersections, and non-manifold geometry, or geometry which cannot exist in reality (e.g. edges included in more than two faces and disconnected vertices).

One common source of imperfect, “real-life” data is 3D object scanning. The resulting models typically include gaps and large self-intersections as a result of incomplete scanning or other error arising from the scanning method. Despite these significant problems, scanned data is still invaluable for rapidly generating assets for use in game development and other applications. During our time at the Summer Geometry Institute, Dr. Matthias Nießner spoke about 3D scene reconstruction with scanned data. His work demonstrated a method of improving the overall quality of reconstructed scenes using bundle adjustment and other techniques. He also worked on solving the problem of incomplete scanned objects using machine learning. Previously, we have written about possible mistakes arising from the weaknesses of machine learning, but Dr. Nießner’s work demonstrates that machine learning is a valuable tool for refining data and eliminating mistakes as well.

Although error in geometry processing is not as frequently discussed, the implications are just as important as those of mistakes arising from the use of machine learning. This is primarily due to the fact that machine learning and geometry processing are not isolated fields or tools, but are often used together, especially in the sorts of situations we described earlier. By researching and developing new methods of data refinement, we can improve the usability of natural data and increase, for example, the safety of systems which rely on visual data.

IV. Human Error and Bias

The errors we have discussed so far exclude human error and bias, which can aggravate existing inequalities in society. For example, a Fellow one of us worked with during SGI mentioned how he worked on a project which used face tracking to animate digital characters. However, the state-of-the-art trackers only worked on him (a white male) and could not track eye or mouth movement for those in his team of black descent. Additionally, as we heard from Theodore Kim in another brilliant SGI talk, animation is focused on designing white skin and non-type 4 hair, further highlighting the systemic racism present in society.

Moreover, the fact that 94.8% of Automatic Gender Recognition (AGR) papers recognize gender as binary has huge implications for the misgendering of trans people. This could lead to issues with AGR based access control for things like public bathrooms, where transgender people may be excluded from both male and female facilities. The combination of machine and human error is especially dangerous, and it is important to recognize this, so that we can mitigate against the worst harm.

V. Conclusion

Algorithms have become a fundamental part of human existence, however our blind faith that algorithms are always (1) correct and (2) unbiased is deeply flawed. The world is a complicated place, with far too much data for computers to handle, placing a strong reliance on simplification algorithms.

As we have seen from the examples above, these algorithms are far from perfect and can sometimes erase or distort important data. This does not mean we should stop using algorithms entirely. What this does, however, mean is that we must employ a hearty dose of critical thinking and skepticism when analyzing results outputted by an algorithm. We must be especially careful if we use these results for making decisions that would influence the lives of other humans.