In the world of computer vision, Simultaneous Localization and Mapping (SLAM) is a critical technique for building a map of an environment while simultaneously tracking a device’s position within it. Traditional SLAM methods rely heavily on geometric models and point cloud data to represent the environment. With the advent of deep learning and neural implicit representations, however, new approaches to SLAM have emerged.

One such approach is NICER-SLAM (Neural Implicit Scene Encoding for RGB SLAM). NICER-SLAM combines a NeRF-style neural implicit scene representation with input from an ordinary RGB camera, with no depth sensor, to build dense 3D maps and track the camera at the same time. This blog walks you through the key concepts, architecture, and applications of NICER-SLAM.

What is NICER-SLAM?

NICER-SLAM is a dense RGB SLAM system that, instead of building a point cloud, encodes the environment in a neural implicit representation. Unlike conventional SLAM systems built on a feature-based pipeline, it optimizes neural networks to represent the scene as a continuous function over space, jointly with the camera poses. According to its authors, this lets it achieve strong mapping and tracking accuracy from RGB data alone, even competitive with recent RGB-D SLAM systems.

Why Neural Implicit Representations?

Neural implicit representations, of which NeRFs are the best-known example, have recently gained traction because they represent 3D scenes continuously and at high resolution, without explicit 3D structures such as meshes or voxel grids. In the context of SLAM, this capability is highly advantageous because it allows for:

- Compact scene encoding: Neural networks compress the scene data into a small, continuous model.

- Smooth interpolation: Scenes can be smoothly reconstructed at any viewpoint, avoiding the discretization issues that arise in traditional 3D SLAM techniques.

- Less reliance on depth sensors: NICER-SLAM performs SLAM operations with RGB cameras, reducing hardware complexity.

How Does NICER-SLAM Work?

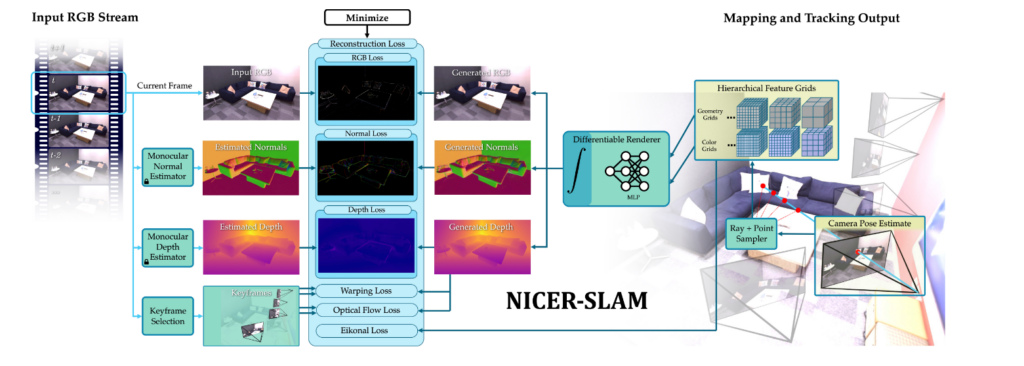

NICER-SLAM consists of two interleaved processes: mapping, which optimizes the scene representation, and tracking, which estimates the camera pose of each new frame. Below is a breakdown of the key components of its pipeline:

1. Scene Representation via Neural Fields

In NICER-SLAM, the scene is encoded with a NeRF-style neural field. Neural Radiance Fields (NeRFs) represent a scene as a continuous volumetric field in which every point in space has a color (its radiance) and a density (how much light the point blocks). NICER-SLAM represents geometry as a signed distance function (SDF) and converts it to density for volume rendering.
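The color and density described above become pixel colors through volume rendering. The following is a minimal NumPy sketch of the standard NeRF compositing rule for a single ray: each sample’s opacity is `1 - exp(-sigma * delta)`, and its weight is that opacity multiplied by the transmittance accumulated in front of it. The function name `render_ray` and the toy ray are illustrative assumptions; NICER-SLAM derives density from an SDF rather than predicting it directly, which this sketch does not model.

```python
import numpy as np

def render_ray(sigmas, colors, t_vals):
    """Alpha-composite per-sample densities and colors along a single ray.

    sigmas: (N,) non-negative densities at N samples along the ray
    colors: (N, 3) RGB color predicted at each sample
    t_vals: (N,) increasing distances of the samples from the camera
    Returns the rendered RGB value and the per-sample weights.
    """
    # Length of each interval; the last one is treated as practically infinite.
    deltas = np.append(np.diff(t_vals), 1e10)
    # Opacity of each interval: probability that the ray stops inside it.
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance: probability that the ray reaches sample i unblocked.
    trans = np.cumprod(np.append(1.0, 1.0 - alphas[:-1]))
    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights

# Toy ray: empty space (density 0, blue) followed by a dense red surface.
t_vals = np.linspace(0.0, 1.0, 5)
sigmas = np.array([0.0, 0.0, 100.0, 100.0, 100.0])
colors = np.array([[0, 0, 1], [0, 0, 1], [1, 0, 0], [1, 0, 0], [1, 0, 0]], dtype=float)
rgb, weights = render_ray(sigmas, colors, t_vals)
print(rgb)  # close to [1, 0, 0]: the empty blue samples get zero weight
```

Because the weights are differentiable functions of the densities and colors, a loss on `rgb` can be back-propagated to whatever network produced them.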

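- NeRF-based Scene Model: NICER-SLAM trains a neural network to predict the geometry and color of points in 3D space, then renders each pixel’s color by integrating these predictions along the corresponding camera ray.

- Optimization: The network is optimized by minimizing the photometric error between the images captured by the camera and the images rendered from the model. Because RGB images alone leave geometry ambiguous, NICER-SLAM adds further supervision from monocular depth and normal estimates, optical flow, and a warping loss that enforces geometric consistency across views. Together, these terms allow it to reconstruct a high-fidelity 3D model from RGB data.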
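The optimization step can be illustrated with a deliberately tiny, hypothetical example: the "scene" is a 1D grid of grayscale values, each pixel is rendered as a weighted sum of grid values interpolated at sample points along its ray, and gradient descent adjusts the grid to minimize the mean squared photometric error against synthetic observations. Everything here (the 1D grid, the fixed rendering weights, `interp_matrix`, `fit_color_grid`) is an assumption chosen for clarity; NICER-SLAM optimizes 3D feature grids and MLP decoders with additional loss terms, and its rendering weights also depend on the learned geometry.

```python
import numpy as np

def interp_matrix(xs, num_cells):
    """Rows hold the linear-interpolation weights of points xs in [0, 1] on a 1D grid."""
    pos = np.asarray(xs, dtype=float) * (num_cells - 1)
    lo = np.clip(np.floor(pos).astype(int), 0, num_cells - 2)
    frac = pos - lo
    rows = np.arange(len(pos))
    m = np.zeros((len(pos), num_cells))
    m[rows, lo] = 1.0 - frac
    m[rows, lo + 1] = frac
    return m

def fit_color_grid(sample_pos, sample_w, observed, num_cells=8, steps=3000):
    """Fit grid colors so rendered pixels match observed ones (mean squared photometric error).

    sample_pos: (R, N) sample positions in [0, 1] along each of R rays
    sample_w:   (R, N) volume-rendering weights, held fixed in this toy
    observed:   (R,) observed pixel intensities
    """
    # Row r maps grid values to rendered pixel r: sum_i w_ri * color(x_ri).
    A = np.stack([w @ interp_matrix(x, num_cells) for x, w in zip(sample_pos, sample_w)])
    R = len(observed)
    # Step size 1/L, where L is the largest curvature of the loss, keeps descent stable.
    lr = 1.0 / (2.0 / R * np.linalg.norm(A, 2) ** 2)
    grid = np.zeros(num_cells)
    for _ in range(steps):
        residual = A @ grid - observed              # per-pixel photometric error
        grid -= lr * (2.0 / R) * (A.T @ residual)   # gradient step on the mean squared error
    return grid, np.mean((A @ grid - observed) ** 2)

# Synthetic data: rays hit a surface at random positions; weight is concentrated near the hit.
rng = np.random.default_rng(0)
true_grid = np.linspace(0.1, 0.9, 8)
hits = rng.uniform(0.0, 1.0, size=200)
sample_pos = np.clip(hits[:, None] + np.array([-0.02, 0.0, 0.02]), 0.0, 1.0)
sample_w = np.tile([0.1, 0.8, 0.1], (200, 1))
observed = np.array([w @ interp_matrix(x, 8) @ true_grid for x, w in zip(sample_pos, sample_w)])
grid, loss = fit_color_grid(sample_pos, sample_w, observed)
print(loss, np.abs(grid - true_grid).max())
```

Many pixels constrain one shared scene representation, which is why the fitted grid can recover the underlying colors even though no single observation determines them.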

2. Pose Estimation and Tracking

To achieve localization, NICER-SLAM tracks the camera’s pose with respect to the map built so far. Rather than relying on a separate pose-regression network, it keeps the scene representation fixed and directly optimizes the pose of each new frame so that images rendered from the map match the observed one.

- Pose Optimization: The camera pose is iteratively refined by minimizing the error between images rendered from the 3D model and the observed RGB frames, which helps keep tracking accurate even in challenging environments.

- Differentiable Rendering: The system uses differentiable rendering to compute the gradients that guide the optimization of both the scene representation and the camera poses.
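Tracking by pose optimization can be sketched in one dimension: the map is a fixed, hypothetical intensity function `scene(x)` whose known derivative `scene_grad(x)` stands in for a differentiable renderer, the camera "pose" is a single offset `t`, and gradient descent on the photometric error recovers the offset from a nearby initial guess. This is an illustrative assumption, not NICER-SLAM’s code; the real system optimizes full 6-DoF camera poses through its volume renderer, and like any local optimization it needs a reasonable initialization.

```python
import numpy as np

def scene(x):
    # Hypothetical fixed "map": intensity as a smooth function of world position.
    return np.sin(x) + 0.5 * np.sin(2.3 * x)

def scene_grad(x):
    # Analytic derivative of scene(x), playing the role of the renderer's gradient.
    return np.cos(x) + 1.15 * np.cos(2.3 * x)

def track_pose(observed, pixels, t_init, lr=0.1, steps=200):
    """Estimate a 1D camera offset t so that scene(pixels + t) matches the observed frame."""
    t = t_init
    for _ in range(steps):
        rendered = scene(pixels + t)              # render the fixed map at the current pose
        residual = rendered - observed            # photometric error per pixel
        grad = 2.0 * np.mean(residual * scene_grad(pixels + t))  # d(mean sq. error)/dt
        t -= lr * grad                            # only the pose is updated; the map stays fixed
    return t

pixels = np.linspace(0.0, 10.0, 200)
t_true = 0.7
observed = scene(pixels + t_true)                 # frame captured at the unknown true pose
t_est = track_pose(observed, pixels, t_init=0.5)
print(t_est)  # converges close to 0.7
```

Mapping is the mirror image of this loop: there the pose is held fixed and the scene parameters are updated.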

Architecture Overview

[Figure: NICER-SLAM architecture]

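The architecture of NICER-SLAM can be divided into three primary components:

- Hierarchical Neural Implicit Map: Multi-level feature grids decoded by small neural networks (MLPs) into geometry, represented as a signed distance function (SDF), and color. Rather than being produced by an encoder network, the map is obtained by optimizing these grids and decoders against the RGB frames.

- Differentiable Volume Renderer: Converts SDF values to density and renders color, depth, and normals along camera rays in the NeRF style, so that losses on the rendered images can be back-propagated to the map and the camera poses.

- Pose Tracking: The pose of each new frame is estimated by minimizing rendering losses with the map held fixed, rather than by a learned pose-estimation network.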
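To make the map component more concrete, here is a hypothetical sketch of a grid-based neural implicit field: features are trilinearly interpolated from several 3D grids of increasing resolution, concatenated, and decoded by a tiny MLP into a signed distance and an RGB color. NICER-SLAM’s paper describes a hierarchical neural implicit map, but the class `HierarchicalField`, the resolutions, the feature sizes, the shared decoder, and the random (untrained) weights here are illustrative assumptions, not its actual configuration.

```python
import numpy as np

def trilinear(grid, pts):
    """Trilinearly interpolate a (R, R, R, C) feature grid at points in [0, 1]^3."""
    res = grid.shape[0]
    p = np.clip(pts, 0.0, 1.0) * (res - 1)
    i0 = np.clip(np.floor(p).astype(int), 0, res - 2)   # lower corner of the enclosing cell
    f = p - i0                                          # fractional offsets inside the cell
    out = np.zeros((len(pts), grid.shape[-1]))
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                w = ((f[:, 0] if dx else 1 - f[:, 0])
                     * (f[:, 1] if dy else 1 - f[:, 1])
                     * (f[:, 2] if dz else 1 - f[:, 2]))
                out += w[:, None] * grid[i0[:, 0] + dx, i0[:, 1] + dy, i0[:, 2] + dz]
    return out

class HierarchicalField:
    """Hypothetical multi-resolution feature grids decoded by a tiny MLP (random, untrained)."""

    def __init__(self, resolutions=(4, 8, 16), feat_dim=4, hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        self.grids = [rng.normal(0.0, 0.1, (r, r, r, feat_dim)) for r in resolutions]
        in_dim = feat_dim * len(resolutions)
        self.w_hidden = rng.normal(0.0, 0.5, (in_dim, hidden))
        self.w_sdf = rng.normal(0.0, 0.5, (hidden, 1))
        self.w_rgb = rng.normal(0.0, 0.5, (hidden, 3))

    def __call__(self, pts):
        # Concatenate the interpolated features from every resolution level.
        feats = np.concatenate([trilinear(g, pts) for g in self.grids], axis=-1)
        h = np.maximum(feats @ self.w_hidden, 0.0)       # ReLU hidden layer
        sdf = (h @ self.w_sdf)[:, 0]                     # signed distance per point
        rgb = 1.0 / (1.0 + np.exp(-(h @ self.w_rgb)))    # sigmoid keeps colors in [0, 1]
        return sdf, rgb

field = HierarchicalField()
pts = np.random.default_rng(1).uniform(0.0, 1.0, size=(5, 3))
sdf, rgb = field(pts)
print(sdf.shape, rgb.shape)  # (5,) (5, 3)
```

In a real system, the grid values and decoder weights are the parameters that the rendering losses optimize, and the field can be queried at any continuous 3D point.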

Rendering Results

Below is an example of the rendering results obtained using NICER-SLAM. These results showcase the system’s ability to create detailed, high-resolution maps of the environment with smooth surfaces and accurate color reproduction.

[Figure: Rendering result on the self-captured outdoor dataset]

Applications of NICER-SLAM

The innovations brought by NICER-SLAM open up possibilities in several fields:

- Autonomous Robotics: Robots could map and navigate unknown environments using only an RGB camera, without relying on depth sensors.

- Augmented Reality (AR): NICER-SLAM can enable smoother and more accurate scene reconstructions for AR applications, improving visual fidelity and interaction with virtual objects.

- 3D Reconstruction: NICER-SLAM’s ability to create dense, continuous 3D maps makes it a strong candidate for 3D scanning and modeling tasks.

References:

- Zhu, Z., et al. (2024). NICER-SLAM: Neural Implicit Scene Encoding for RGB SLAM. International Conference on 3D Vision (3DV). arXiv preprint (2023).

- Mildenhall, B., et al. (2020). NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. Proceedings of the European Conference on Computer Vision (ECCV). arXiv preprint.

- Zhu, Z., et al. (2021). Learning-Based SLAM: Progress, Applications, and Future Directions. IEEE Robotics and Automation Magazine. arXiv preprint.

- Klein, G., & Murray, D. (2007). Parallel Tracking and Mapping for Small AR Workspaces. IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR).