In this two-part post series, Nicolas and I dive deeper into SLAM systems, our project’s focus for the past two weeks. In this part, I introduce SLAM systems and cover their evolution. In the next part, Nicolas builds on that interest by discussing the future. By the end of both parts, we should be able to give you an overview of What it Takes to Get a SLAM Dunk.

Collaborators: Nicolas Pigadas

Introduction

Simultaneous Localization and Mapping (SLAM) systems have become standard in various technological fields, from autonomous robotics to augmented reality. However, in recent years, this technology has found a particularly unique application in medical imaging, specifically in endoscopic videos. But what is SLAM?

SLAM systems were conceptualized in robotics and computer vision for navigation purposes. Before SLAM, the fields employed more elementary methods:

- Localization: the ability of a system (a robot, say) to determine its position within a known environment. This can be done using odometry, landmark-based localization, and dead reckoning.

- Mapping: the process of creating a representation of an environment, typically in the form of a 2D or 3D map. This was done using grid-based and feature-based methods.

You may be thinking: Krishna, it sounds like you just described SLAM systems. You are right, but localization and mapping were separate processes. So a robot faced a trade-off loosely reminiscent of the Heisenberg uncertainty principle, i.e., the robot would either localize or map, and the "or" is exclusive.

The next step in research was fairly obvious, but still daunting. Before we SLAM dunk our basketball, we must practice a few lay-ups and free throws first.

Precursors to SLAM

Here are some inspirations that contributed to the development of SLAM:

- Probabilistic robotics: The introduction of probabilistic approaches, such as Bayesian filtering, allowed robots to estimate their position and map the environment with a degree of uncertainty, paving the way for more integrated systems.

- Kalman filtering: a mathematical technique for estimating the state of a dynamic system from noisy measurements. It allowed for continuous estimation of a robot’s position and remained robust to noisy sensor data.

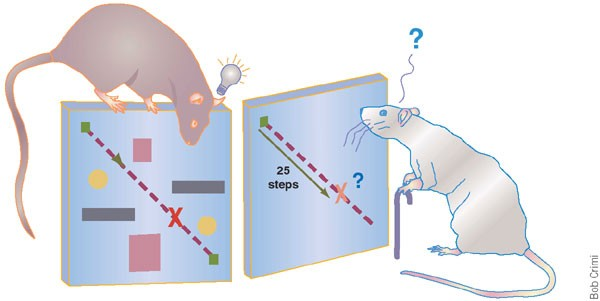

- Cognitive Mapping in Animals: Research in cognitive science and animal navigation provided theoretical inspiration, particularly the idea that animals build mental maps of their environment while simultaneously keeping track of their location.
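To make the Kalman filtering idea above a bit more concrete, here is a minimal sketch of a one-dimensional filter that estimates a roughly constant position from noisy readings. The function name `kalman_1d`, its parameters, and the sample readings are all assumptions made up for illustration; real robots track multi-dimensional states with motion models.

```python
def kalman_1d(measurements, meas_var, process_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a (nearly) constant state.

    Hypothetical helper for illustration only.
    Returns a list of (estimate, variance) pairs, one per measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state is assumed static, so only uncertainty grows.
        p = p + process_var
        # Update: the Kalman gain balances prior uncertainty against sensor noise.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append((x, p))
    return estimates


# Made-up noisy readings of a position that is really close to 5.0.
readings = [4.8, 5.3, 5.1, 4.9, 5.2, 4.7, 5.0, 5.1]
for estimate, variance in kalman_1d(readings, meas_var=1.0, process_var=0.0, p0=1000.0):
    print(f"estimate={estimate:.3f} variance={variance:.3f}")
```

Each reading pulls the estimate toward the measurement by an amount set by the gain, and the variance shrinks as evidence accumulates, which is the sense in which the filter copes with noisy sensors.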

SLAM Dunk – A Culmination (some real Vince Carter stuff)

Finally, many researchers agreed that the separation of localizing and mapping was ineffective, and great efforts went into their integration. SLAM was developed. The goal was to enable systems to explore and understand an unknown environment autonomously. To do so, they needed to localize and map the environment simultaneously, with each task informing and improving the other.
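As a rough illustration of localization and mapping informing each other, here is a toy sketch of a joint Kalman filter on a one-dimensional track. The state holds both the robot position and a single landmark position, and the robot measures the distance to that landmark. Everything here (the function names, the noise values, the noise-free simulated readings) is an assumption chosen to keep the example small and deterministic, not a description of any particular SLAM system.

```python
def predict(state, cov, u, motion_var):
    """Move the robot by u; only the robot's uncertainty grows."""
    x, m = state
    (pxx, pxm), (pmx, pmm) = cov
    return [x + u, m], [[pxx + motion_var, pxm], [pmx, pmm]]


def update(state, cov, z, meas_var):
    """Fuse a range reading z = landmark - robot (measurement matrix H = [-1, 1])."""
    x, m = state
    (pxx, pxm), (pmx, pmm) = cov
    innovation = z - (m - x)
    s = pxx - pxm - pmx + pmm + meas_var  # innovation variance H P H^T + R
    kx = (pxm - pxx) / s                  # Kalman gain for the robot
    km = (pmm - pmx) / s                  # Kalman gain for the landmark
    new_state = [x + kx * innovation, m + km * innovation]
    # Covariance update P <- (I - K H) P, written out for the 2x2 case.
    new_cov = [
        [(1 + kx) * pxx - kx * pmx, (1 + kx) * pxm - kx * pmm],
        [km * pxx + (1 - km) * pmx, km * pxm + (1 - km) * pmm],
    ]
    return new_state, new_cov


def run_toy_slam(steps=5, true_landmark=10.0):
    state = [0.0, 0.0]                 # robot at 0, landmark guess at 0
    cov = [[0.0, 0.0], [0.0, 100.0]]   # robot known, landmark very uncertain
    true_x = 0.0
    for _ in range(steps):
        true_x += 1.0
        state, cov = predict(state, cov, u=1.0, motion_var=0.1)
        z = true_landmark - true_x     # noise-free reading, for a deterministic demo
        state, cov = update(state, cov, z, meas_var=0.5)
    return state, cov


state, cov = run_toy_slam()
print("robot, landmark:", state)
print("covariance:", cov)
```

The off-diagonal covariance terms become non-zero after the first update. That correlation is what lets a better landmark estimate tighten the robot estimate, and vice versa.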

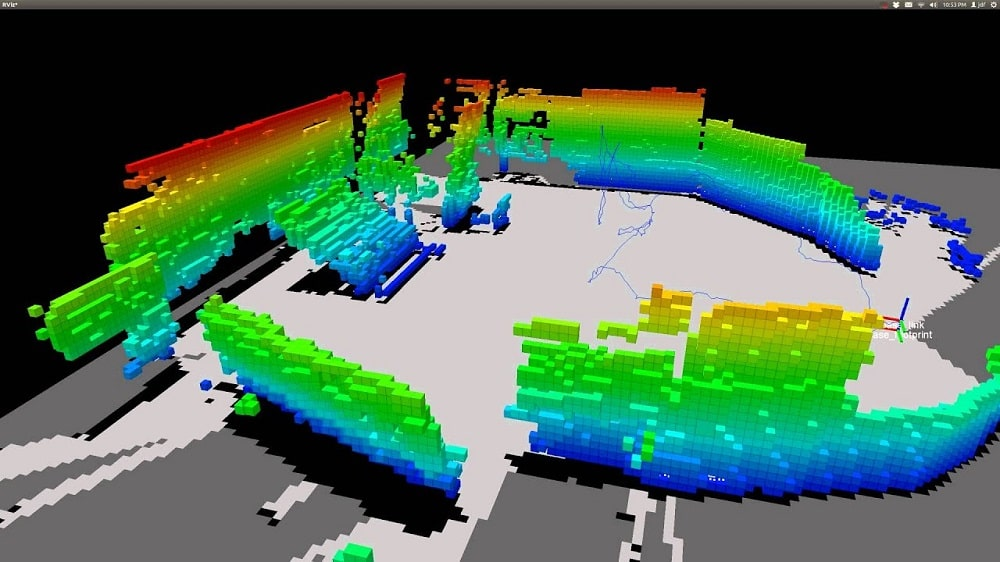

With its unique ability to localize and map at the same time, researchers found uses for SLAM in a wide range of sensing devices. Some of SLAM’s earliest uses, in the late 80s and early 90s, were based on range sensors, with data coming from range finders, sonar, and LIDAR. It is worth noting that the algorithms were computationally intensive, and still are.

As technology evolved, vision-based SLAM emerged. This shift was inspired by the human visual system, which navigates the world primarily through sight, enabling more natural and flexible mapping techniques.

Key Milestones

With the latest iterations of SLAM being far more capable than the original systems, it is important to recognize the journey. Here are some notable SLAM systems:

- EKF-SLAM (Extended Kalman Filter SLAM): One of the earliest and most influential SLAM algorithms, EKF-SLAM laid the foundation for probabilistic approaches to SLAM, allowing for more accurate mapping and localization.

- FastSLAM: Introduced in the early 2000s, FastSLAM utilized particle filters, making it more efficient and scalable than EKF-SLAM as the number of landmarks grows. This development was crucial in enabling real-time SLAM applications.

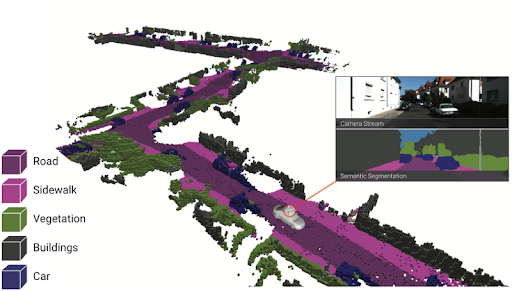

- Visual SLAM: The transition to vision-based SLAM in the mid-2000s opened new possibilities for the technology. Visual SLAM systems, such as PTAM (Parallel Tracking and Mapping), enabled more detailed and accurate mapping using standard cameras, a significant step toward broader applications.
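For readers curious about the particle filters mentioned under FastSLAM, here is a small sketch of one ingredient that particle filters generally rely on: resampling, where unlikely hypotheses are dropped and likely ones are duplicated. This is not FastSLAM itself. The function name `systematic_resample` and the toy particles are assumptions for illustration, and systematic (low-variance) resampling is just one common choice.

```python
import random


def systematic_resample(particles, weights, rng=None):
    """Draw len(particles) samples in proportion to weights (low-variance scheme).

    Hypothetical helper for illustration; weights need not be normalized.
    """
    rng = rng or random.Random()
    n = len(particles)
    step = sum(weights) / n
    start = rng.uniform(0, step)  # one random offset shared by all pointers
    resampled = []
    cumulative = weights[0]
    i = 0
    for k in range(n):
        pointer = start + k * step
        # Advance to the particle whose weight interval contains the pointer.
        while pointer >= cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(particles[i])
    return resampled


# Four pose hypotheses; only "c" agrees with the (imaginary) sensor data.
print(systematic_resample(["a", "b", "c", "d"], [0.0, 0.0, 1.0, 0.0]))
# Equal weights keep every hypothesis exactly once.
print(systematic_resample(["a", "b", "c", "d"], [1.0, 1.0, 1.0, 1.0]))
```

In a full particle-filter SLAM system, each particle would carry a robot pose hypothesis together with its own map estimate, and the weights would come from how well that hypothesis explains the latest sensor reading.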

From Robotics to Endoscopy (Medical Vision)

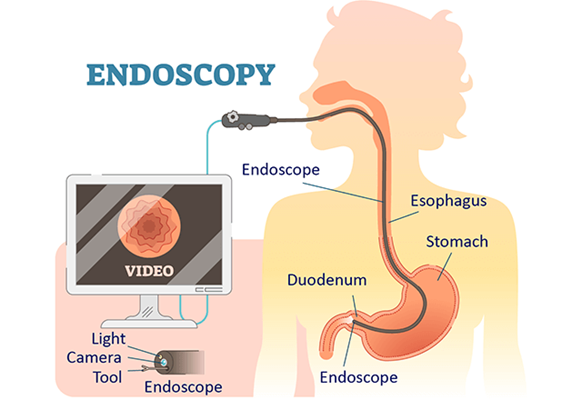

As SLAM technology matured, researchers explored its potential beyond traditional robotics. Medical imaging, particularly endoscopy, presented a fantastic opportunity for SLAM. Endoscopy is a medical procedure involving a flexible tube with a camera to visualize the body’s interior, often within complex and dynamic environments like the gastrointestinal tract.

It is easy to see why SLAM could be applied to endoscopic and endoscopy-like procedures to gain insights and make more medically informed decisions. Early work focused on using visual SLAM to navigate the gastrointestinal tract, where the narrow and deformable environment presented significant challenges.

One of the first successful implementations involved using SLAM to reconstruct 3D maps of the colon during colonoscopy procedures. This approach improved navigation accuracy and provided valuable information for diagnosing conditions like polyps or tumors.

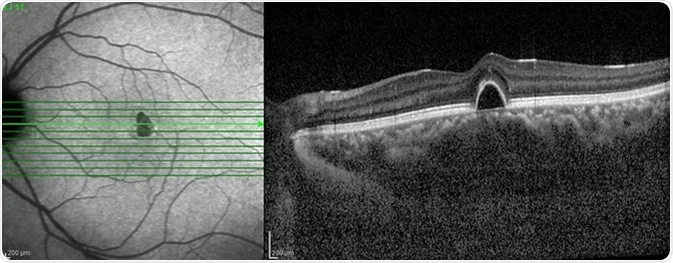

Researchers also explored the integration of SLAM with other technologies, such as optical coherence tomography (OCT) and ultrasound, to enhance the quality of the maps and provide additional layers of information. These efforts laid the groundwork for more advanced SLAM systems capable of handling the complexities of real-time endoscopic navigation.

Endoscopy SLAMs – What Our Group Looked At

As a part of our study, we looked at some presently used and state-of-the-art SLAM systems. Below are the three that various members of our team attempted:

- NICER-SLAM (RGB): a dense RGB SLAM system that simultaneously optimizes for camera poses and a hierarchical neural implicit map representation, which also allows for high-quality novel view synthesis.

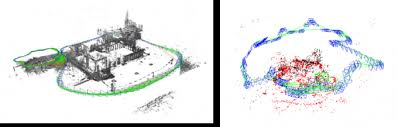

- ORB-SLAM3 (RGB): the successor to ORB-SLAM and ORB-SLAM2. ORB-SLAM3 is the first real-time SLAM library able to perform Visual, Visual-Inertial, and Multi-Map SLAM with monocular, stereo, and RGB-D cameras, using pin-hole and fisheye lens models. In all sensor configurations, ORB-SLAM3 is as robust as the best systems available in the literature and significantly more accurate.

- DROID-SLAM (RGB): a new deep learning-based SLAM system. DROID-SLAM consists of recurrent iterative updates of camera pose and pixel-wise depth through a Dense Bundle Adjustment layer.

Some other SLAM systems that our team would have loved to try our hand at are:

- Gaussian Splatting SLAM: the first application of 3D Gaussian Splatting in monocular SLAM, the most fundamental but also the hardest setup for visual SLAM.

- GlORIE-SLAM: Globally Optimized RGB-only Implicit Encoding Point Cloud SLAM. This system uses a deformable point cloud as the scene representation and achieves lower trajectory error and higher rendering accuracy compared to competitive approaches.

More SLAM methods can be found in this survey.

Conclusion

This concludes part 1 of What it Takes to Get a SLAM Dunk. This post should have given you a gentle, but robust-enough introduction to SLAM systems. Vince Carter might even approve.