Authors: Sana Arastehfar, Erik Ekgasit, Berfin Inal, Maria Stuebner

Abstract:

Latent NeRF (Neural Radiance Fields) is a state-of-the-art generative model capable of synthesizing 3D-consistent 2D images from a combination of 3D-sketch and text guides. In this report, we investigate the temporal consistency of Latent NeRF by generating 3D-consistent images with different sketch guides and by exploring the interplay between 3D-sketch guidance and text prompts in the generation process. Our broader motivation is the use of diffusion models in animation and dynamic scene generation. Through our experiments, we find that both 3D-sketch and text information are needed to achieve accurate and consistent results. Additionally, we propose potential enhancements, including the integration of a geometry/shape loss and automatic generation of descriptive text from geometry, to improve the model’s performance.

Introduction:

A Neural Radiance Field (NeRF) is a relatively new representation of geometry. It uses a neural network to approximate a function F: (x, θ) → (c, σ) that models the appearance of a single scene. This function takes a sampled 3D point x and a viewing direction θ, both derived from a 2D image with known camera information, and returns the color c = (r, g, b) and volume density σ at that point. This radiance field is enough to encode the shape and color of a scene as well as its view-dependent lighting effects.
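To make the role of F concrete, here is a minimal sketch of how a color and an opacity for one pixel can be obtained from a radiance field by standard volume-rendering quadrature along a camera ray. The function `toy_radiance_field` is a hypothetical stand-in (a solid orange sphere), not a trained network, and the sample count and ray bounds are arbitrary assumptions.

```python
import math

def toy_radiance_field(x, view_dir):
    """Hypothetical stand-in for F: returns (color, density) at point x.

    Models a solid unit sphere of constant color. view_dir is unused here;
    a trained NeRF would use it for view-dependent effects.
    """
    inside = math.sqrt(sum(v * v for v in x)) < 1.0
    color = (1.0, 0.5, 0.0)
    sigma = 5.0 if inside else 0.0
    return color, sigma

def render_ray(field, origin, direction, near=0.0, far=4.0, n_samples=64):
    """Composite color and opacity along one ray with evenly spaced samples."""
    delta = (far - near) / n_samples
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * delta
        point = tuple(o + t * d for o, d in zip(origin, direction))
        color, sigma = field(point, direction)
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return tuple(pixel), 1.0 - transmittance

# One ray through the sphere and one that misses it.
hit_color, hit_opacity = render_ray(toy_radiance_field, (0.0, 0.0, -2.0), (0.0, 0.0, 1.0))
miss_color, miss_opacity = render_ray(toy_radiance_field, (3.0, 0.0, -2.0), (0.0, 0.0, 1.0))
```

The ray that passes through the sphere ends up almost fully opaque with the sphere's color, while the ray that misses it accumulates nothing. Training a NeRF amounts to adjusting the field so that pixels rendered this way match the target images.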

Traditionally, NeRFs have been trained with sets of images from the real world or images that are rendered using computer graphics software. Recently, image diffusion models such as Stable Diffusion have been able to generate coherent images from just text prompts. Combining diffusion models and NeRFs has led to Latent NeRF, a cutting-edge generative model that uses an image diffusion model to train NeRFs using just a text prompt and an optional guiding 3D shape.

We investigate the use of Latent NeRF to create sequential images and animation. One major challenge in doing so is temporal consistency: it is currently difficult to ensure that diffusion models recreate the same object or character between runs. This week, we attempt to achieve temporal consistency in the model’s output and examine the influence of combining different input modalities.

Methodology:

We conducted a series of experiments to evaluate the temporal consistency of the Latent NeRF model and explore the interaction between sketches and text prompts during image synthesis.

Temporal Consistency Assessment:

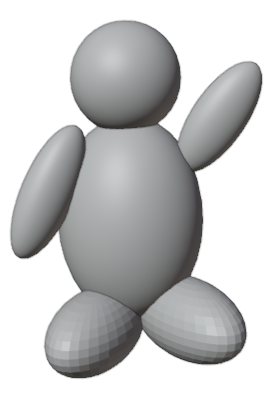

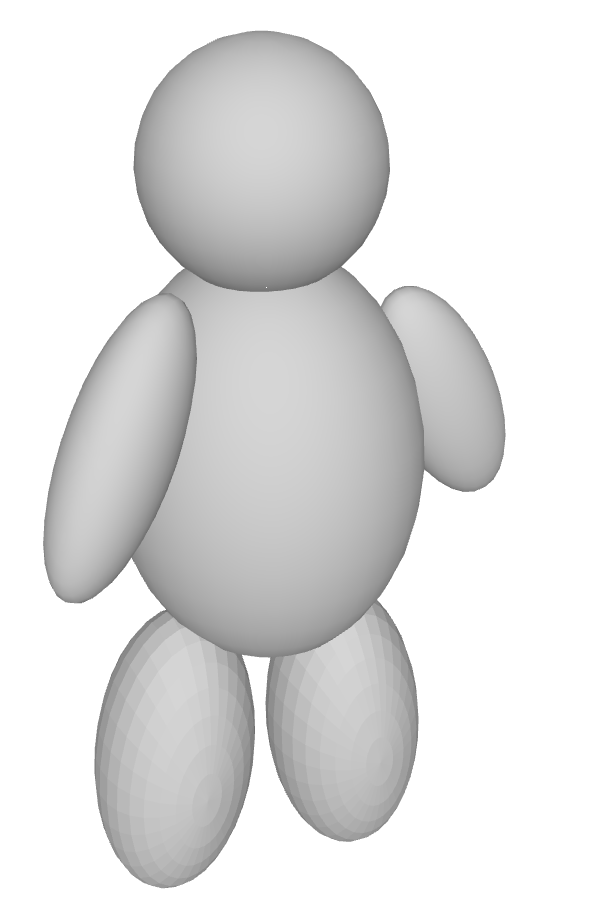

We first tested consistency between two poses under the most naive approach to get a baseline for consistency in Latent NeRF. We started by using sketch shapes to guide the shape of the NeRFs. Each sketch shape is a collection of simple triangle meshes arranged in roughly the same shape as the desired output. Erik, our team's Blender expert, created sketch shapes of a teddy bear in different poses and configurations to guide the NeRF generation. Figure 1 depicts the original sketch shape of a teddy bear with its left arm raised, and Figure 2 shows the same teddy bear sketch with both arms down. Given the same text prompt, would the NeRFs generated from these sketch shapes look like the same object in different poses? According to the results in Figure 4 and Figure 5, the answer is no. The models have different colors, making them unsuitable for use as frames of animation.
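The color mismatch between two poses could be quantified rather than judged by eye. The following is a minimal sketch of one possible measure, the distance between the mean foreground colors of two renders. The helper names and the pixel values are made up for illustration and are not measurements from the experiments above.

```python
import math

def mean_color(pixels):
    """Average RGB over a list of (r, g, b) tuples with values in [0, 1]."""
    n = len(pixels)
    return tuple(sum(p[k] for p in pixels) / n for k in range(3))

def color_drift(pixels_a, pixels_b):
    """Euclidean distance between the mean colors of two renders."""
    return math.dist(mean_color(pixels_a), mean_color(pixels_b))

# Made-up foreground pixels standing in for two rendered poses.
pose_a = [(0.6, 0.4, 0.2), (0.7, 0.5, 0.3)]
pose_b = [(0.2, 0.3, 0.6), (0.3, 0.4, 0.7)]

print(color_drift(pose_a, pose_a))  # identical renders
print(color_drift(pose_a, pose_b))  # renders with different colors
```

A drift near zero would indicate that the two poses share a color palette. A mean-color distance ignores where colors appear on the object, so it is only a coarse first check.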

Cross-Modal Interaction:

To understand the interplay between sketches and text prompts, we augmented the base sketch with a sword (Figure 3). We experimented with two different text prompts: “a lego man” (following the convention in previous papers, which use “lego man” rather than “lego human”) and “a lego man holding a sword”. Figure 6 illustrates the output for the “a lego man” prompt, where only a phantom of the sword was generated. With the “a lego man holding a sword” prompt, however, the model successfully generated a visible sword (Figure 7). The new object became clearly visible only when both the sketch and the text prompt described it, which suggests the importance of cross-modal interaction.

Additionally, we wanted to assess how much support text guidance needs from sketch guidance. To test this, we generated various lego humans from specific instructions, such as “a lego man with right arm up” and “a lego man with left arm up”, while keeping the sketch guidance fixed to the same teddy sketch in Figure 1. As seen in Figures 8 and 9, although the text prompts request opposite actions, the outputs are quite similar to each other, suggesting that text guidance alone is not sufficient to generate the desired output.

Discussion:

Our observations indicate that Latent NeRF’s temporal consistency can be improved by incorporating additional constraints and interactions between different input modalities. This suggests that using a long description of the desired character could enforce temporal consistency between poses.

Ideas:

Building on our observations and discussions, we have some ideas for improving temporal consistency in Latent NeRF. We could integrate a geometry/shape loss to enhance the model’s ability to maintain consistency between generated images. Additionally, we could develop a mechanism that automatically extracts descriptive words from the geometry to use as complementary text prompts. Finally, we could use Stable Diffusion concepts to guide consistency.
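As an illustration of what a geometry/shape loss might look like, here is a minimal sketch under stated assumptions: it compares the opacity a NeRF predicts at a set of sample points with whether each point lies inside the sketch mesh, using binary cross-entropy. This is one possible formulation for discussion, not the loss used in Latent NeRF and not something we have implemented. The function name and inputs are hypothetical.

```python
import math

def shape_loss(pred_alpha, sketch_occupancy, eps=1e-6):
    """Mean binary cross-entropy between NeRF opacity and sketch occupancy.

    pred_alpha:       opacities in [0, 1] predicted at sample points
    sketch_occupancy: 1 if the point is inside the sketch mesh, else 0
    """
    total = 0.0
    for a, occ in zip(pred_alpha, sketch_occupancy):
        a = min(max(a, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(occ * math.log(a) + (1 - occ) * math.log(1.0 - a))
    return total / len(pred_alpha)

occupancy = [1, 0]                        # first point inside, second outside
agrees = shape_loss([0.9, 0.1], occupancy)
disagrees = shape_loss([0.1, 0.9], occupancy)
```

A NeRF that is opaque where the sketch is solid and empty elsewhere receives a lower loss than one that does the opposite. Sharing such a term across poses would pull each generated shape toward its own sketch, though it does not by itself constrain color or texture.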

Potential Experiments:

Moving forward, we would like to explore consistency when generating objects with similar geometry (e.g., using different keywords such as “sword” or “stick” with the same sketch). To that end, we plan to repeat the same experiment with different combinations of geometry and text. Additionally, we would like to investigate the model’s performance with text prompts that do not match the geometry, such as “stick” or “apple” when the sketch in fact displays a sword, to evaluate the robustness of cross-modal interactions. Lastly, we think it would be interesting to find or create a Stable Diffusion concept and apply it to the generation of two different poses.
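The planned combinations of geometry and text can be enumerated programmatically so that no pairing is skipped. The sketch below builds such a grid. The sketch-shape identifiers and the prompt template are hypothetical placeholders, not file names or settings from our setup.

```python
from itertools import product

# Hypothetical identifiers for the sketch shapes to be tested.
sketches = ["lego_man", "lego_man_with_sword"]
held_objects = ["sword", "stick", "apple"]

def article(noun):
    """Pick 'a' or 'an' for a noun (simple vowel rule)."""
    return "an" if noun[0] in "aeiou" else "a"

# One run per (sketch, prompt) pair.
runs = [
    {"sketch": sketch, "prompt": f"a lego man holding {article(obj)} {obj}"}
    for sketch, obj in product(sketches, held_objects)
]

for run in runs:
    print(run["sketch"], "|", run["prompt"])
```

Each entry pairs one sketch with one prompt, including the mismatched cases (for example, a sword sketch with an apple prompt) that probe how robust the cross-modal interaction is.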

Conclusion:

Our exploration of Latent NeRF’s temporal consistency and cross-modal interactions highlights the importance of combining sketch and text guides to achieve consistent and accurate 3D image synthesis. By addressing the observed issues and implementing potential enhancements, the model can be further refined to generate even more realistic and consistent images across different inputs. Eventually, this will help animators, modelers, and content creators to easily generate dynamic NeRFs with articulated characters and scenes.

Technical Journey:

Neural networks are known for their substantial computational demands, which lead to long training and inference times. Although recent advances in NeRF research have improved training and inference speeds, these models still have high memory requirements, as do diffusion models. Consequently, running Latent NeRF requires a machine with a GPU that has at least 12 GB of VRAM.

Training a single NeRF takes a substantial amount of time, ranging from 30 minutes on an NVIDIA RTX 3090 to 3 hours on Google Colab. Moreover, Latent NeRF relies on numerous dependencies, and installing them properly demanded considerable troubleshooting. Because the project sits at the intersection of two distinct areas of graphics research, NeRFs and diffusion models, technical challenges during dependency management were to be expected. Such troubleshooting is an inevitable and crucial part of the overall research process.

Fortunately, the mentors of this project, Sainan Liu, Ilke Demir, and Olga Guțan, provided valuable guidance in navigating these technical complexities, which significantly sped up the resolution of issues and allowed us to focus on the core aspects of our project.

Papers:

For temporal consistency with better texture and geometry details, we explored D-NeRF, EditableNeRF, Tetra NeRF, NeRFEditing, and One-2-3-45. For geometric manipulation toward temporally consistent 3D animation generation with text/image guidance, we reviewed SKED and DASR.
