Fellows: Matheus Araujo, Aditya Abhyankar, Sahana Kargi, Van Le, Maria Stuebner

Mentor: Martin Skrodzki

Introduction

Hyperbolic geometry has recently gained popularity as a promising model for representing non-Euclidean data, with applications ranging from natural language processing to computer vision. It can also be useful in geometry processing, for example to verify the discrete conformal equivalence of different triangulations of a surface. One important advantage of hyperbolic geometry is its capacity to represent and visualize hierarchical structures more naturally than Euclidean geometry. However, data visualization algorithms such as t-distributed stochastic neighbor embedding (t-SNE) have been explored far more extensively in Euclidean space than in hyperbolic space. In particular, spatial data structures can be leveraged to accelerate the approximation of nearest neighbors and provide significant performance improvements. In this project, mentored by Professor Martin Skrodzki, we investigate different implementations and strategies for spatial acceleration data structures adapted to hyperbolic geometry, such as polar quadtrees, to speed up nearest-neighbor computations in hyperbolic space.

Hyperbolic Geometry

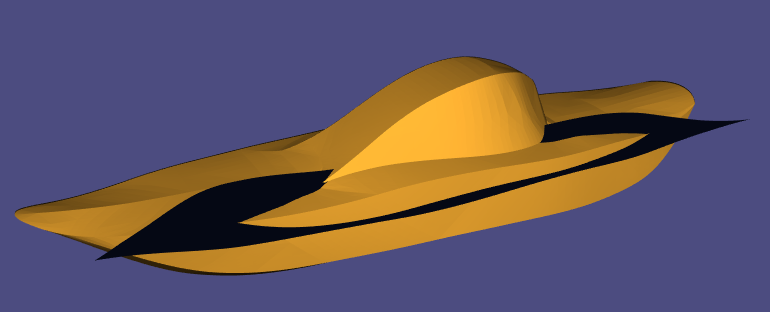

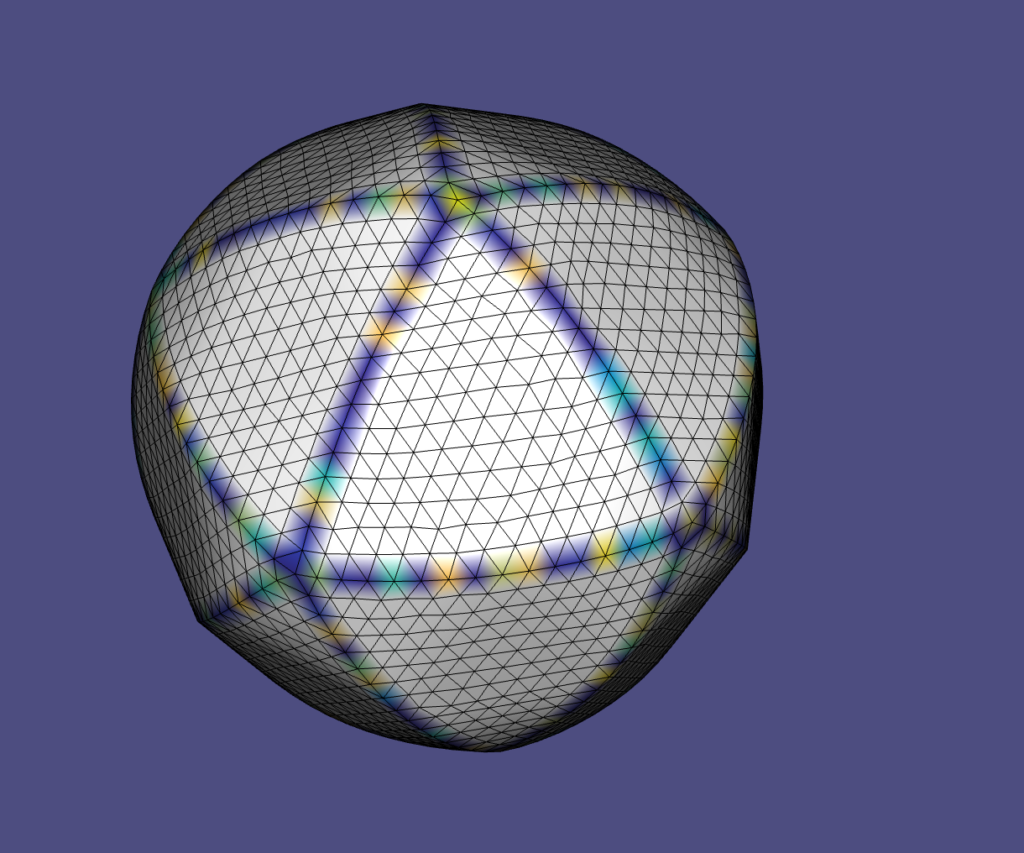

Hyperbolic geometry accepts four out of five of Euclid’s postulates of geometry. An interpretation of Euclid’s fifth postulate is known as “Playfair’s Axiom,” which states that, given a line and a point not on it, at most one line parallel to the given line can be drawn through the point. Hyperbolic geometry accepts an altered version of this axiom, which allows for an infinite number of lines through the given point that are parallel to the given line. There is no easy way to interpret this geometry in Euclidean space. The hyperbolic plane has constant negative Gaussian curvature, equal to \(-1\) at every point. One visualization attempt is the pseudosphere, which is the shape obtained by revolving the tractrix curve about its asymptote, but this shape has singularities. Other attempts use crochet, which results in fractal-like shapes similar to coral reefs, but these only provide finite patches with constant Gaussian curvature. Locally, we can picture the hyperbolic plane as a saddle surface like a Pringle, but this is only a very local approximation. There are many other models that allow us to make sense of hyperbolic geometry using projections. The one relevant to this project is the Poincaré disk model, which is obtained by perspectively projecting one sheet of the two-sheeted hyperboloid onto the unit disk in \(\mathbb{R}^2\).
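As a minimal sketch of that projection, the snippet below assumes the standard construction: the upper sheet of the hyperboloid \(t^2 - x^2 - y^2 = 1\) is projected from the point \((0, 0, -1)\) onto the plane \(t = 0\). The function names are illustrative and not taken from the project code.

```python
import math


def hyperboloid_to_poincare(x, y, t):
    """Project a point of the upper sheet (t > 0, t^2 - x^2 - y^2 = 1)
    onto the open unit disk, using (0, 0, -1) as the center of projection."""
    return x / (1.0 + t), y / (1.0 + t)


def poincare_to_hyperboloid(u, v):
    """Inverse map: lift a point (u, v) of the open unit disk to the upper sheet."""
    r2 = u * u + v * v
    denom = 1.0 - r2
    return 2.0 * u / denom, 2.0 * v / denom, (1.0 + r2) / denom


# A point at hyperbolic distance rho from the apex of the hyperboloid
# lands at Euclidean radius tanh(rho / 2) in the disk.
rho = 2.0
u, v = hyperboloid_to_poincare(math.sinh(rho), 0.0, math.cosh(rho))
print(u, math.tanh(rho / 2.0))
```

Under this map, points far out on the hyperboloid are compressed towards the boundary of the disk, which is why the boundary circle represents points at infinity.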

t-SNE Algorithm

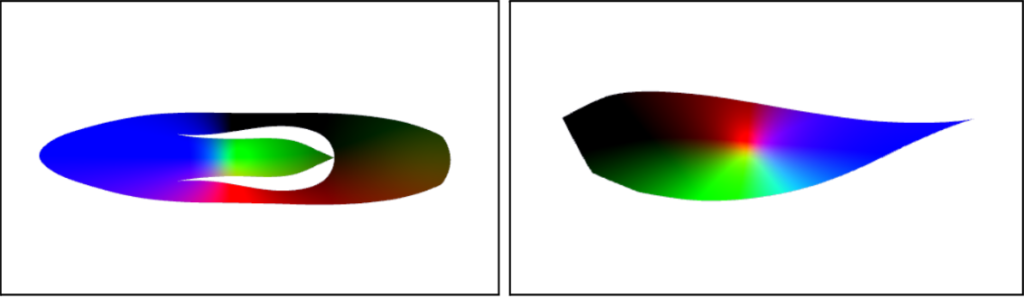

An interesting application of hyperbolic space is the visualization of hierarchical data, such as clusters of single-cell data or the semantic relationships within an English vocabulary. In the Poincaré disk model, area grows exponentially with distance from the origin, so the disk can accommodate far more data than Euclidean space, where area grows only polynomially. Another interesting property of hyperbolic space is that, as two points at a fixed angular separation move further from the origin, the hyperbolic distance \(d(x,y)\) between them approaches \(d(x,0)+d(y,0)\) up to a bounded additive constant. This is extremely useful for visualizing tree-like data, where the shortest path between two child nodes passes through their parent. One general challenge in data visualization is that the data points lie in a very high-dimensional space. A simple projection down to a lower dimension destroys clusters and spatial relationships in the data. The t-SNE algorithm is an iterative gradient-based procedure that recovers these relationships after an initial projection, such as one obtained from a principal component analysis (PCA). In each iteration, the t-SNE algorithm computes an update for each data point as a sum of “attractive forces” pulling it towards spatially close points and a sum of “repulsive forces” pushing it away from spatially distant points. For each data point, the algorithm computes a similarity score with every other data point in both the high- and low-dimensional spaces and derives the attractive and repulsive forces from these scores. If the low-dimensional space is the 2D Poincaré disk, the distance used for computing the similarity scores is the hyperbolic distance:

\(d(\mathbf{u}, \mathbf{v}) = \cosh^{-1} \left(1 + 2 \cdot \frac{\| \mathbf{u} - \mathbf{v} \|^{2}}{(1 - \| \mathbf{u} \|^{2})(1 - \| \mathbf{v} \|^{2})} \right)\).
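The distance formula above translates directly into code. The following sketch uses only the Python standard library; the helper `from_polar` and the chosen test points are illustrative assumptions. It also checks the tree-like behaviour numerically: for two points at the same hyperbolic radius and a right angle apart, the gap \(d(x,0)+d(y,0)-d(x,y)\) stays bounded (it tends to \(\ln 2\)) while the distances themselves grow without bound.

```python
import math


def poincare_distance(u, v):
    """Hyperbolic distance between two points (x, y) of the open unit disk."""
    diff2 = (u[0] - v[0]) ** 2 + (u[1] - v[1]) ** 2
    nu2 = u[0] ** 2 + u[1] ** 2
    nv2 = v[0] ** 2 + v[1] ** 2
    return math.acosh(1.0 + 2.0 * diff2 / ((1.0 - nu2) * (1.0 - nv2)))


def from_polar(rho, theta):
    """Disk point at hyperbolic distance rho from the origin, at angle theta."""
    r = math.tanh(rho / 2.0)  # Euclidean radius in the Poincaré disk
    return (r * math.cos(theta), r * math.sin(theta))


origin = (0.0, 0.0)
for rho in (1.0, 4.0, 8.0):
    x = from_polar(rho, 0.0)
    y = from_polar(rho, math.pi / 2.0)
    through_origin = poincare_distance(x, origin) + poincare_distance(y, origin)
    gap = through_origin - poincare_distance(x, y)
    print(f"rho={rho}: path through origin={through_origin:.4f}, gap={gap:.4f}")
```

Note that the denominator vanishes as either point approaches the unit circle, so a practical implementation would likely clamp points slightly inside the disk.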

Quadtrees

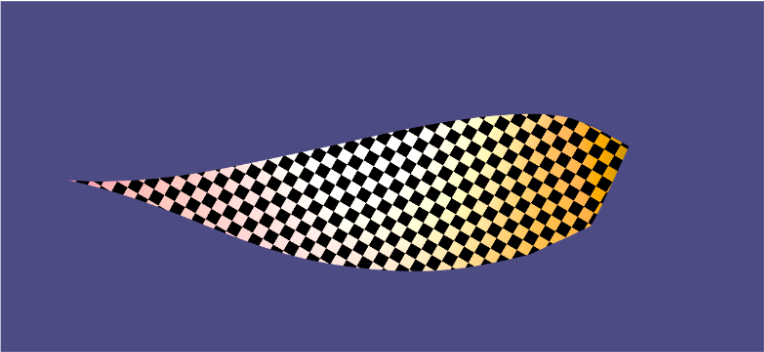

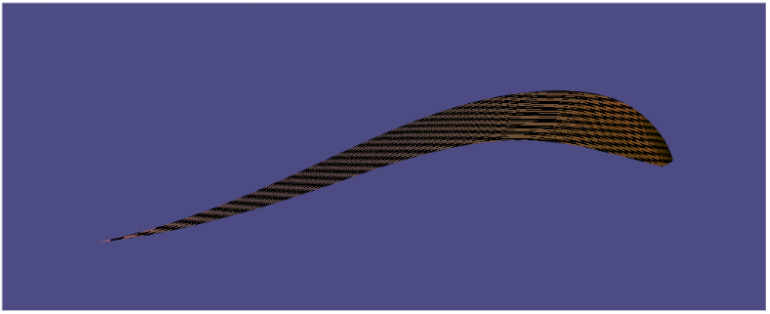

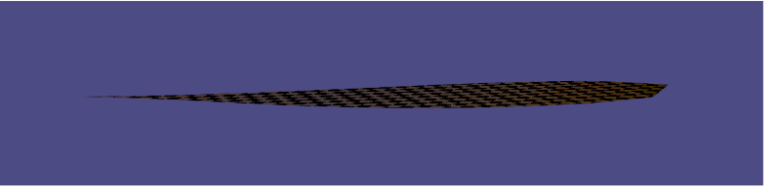

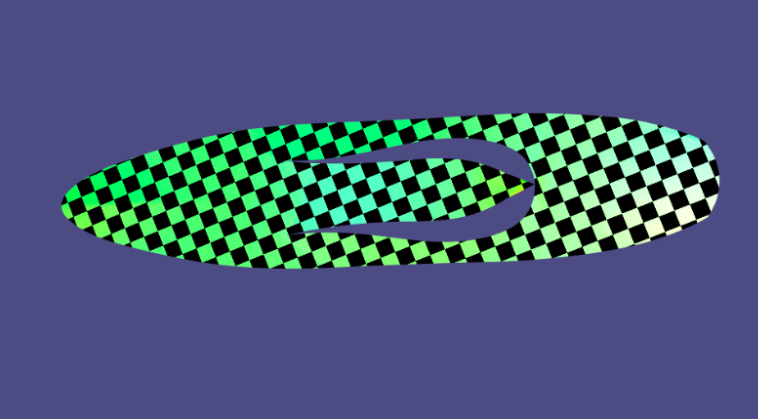

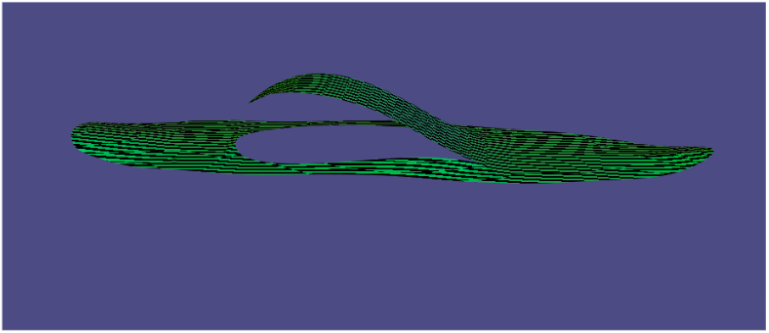

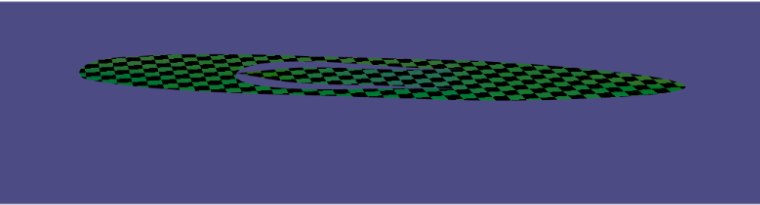

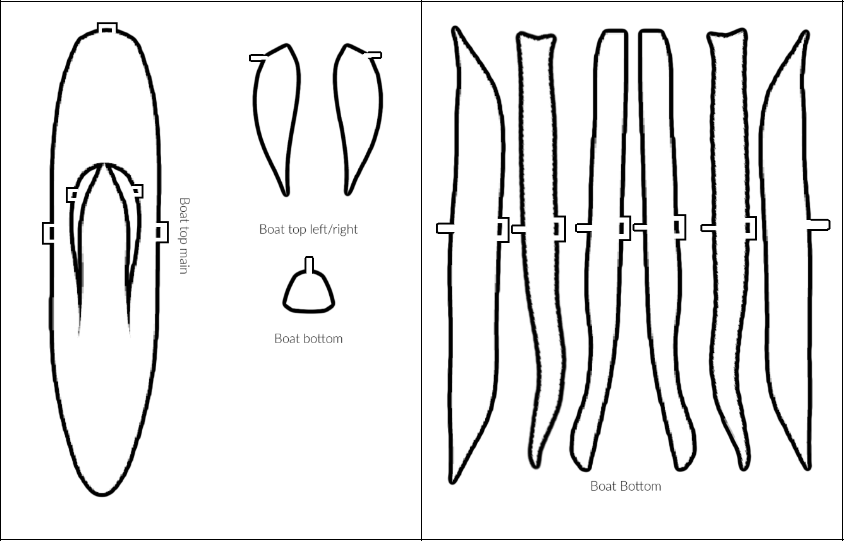

The main bottleneck of the t-SNE algorithm is the computation of the repulsive forces. Computed naively, this takes \(O(N^2)\) time per iteration, which can be reduced substantially by using a spatial data structure such as a quadtree. Each node represents a polar rectangle on the Poincaré disk (with a minimum and maximum radius and angle) and is either a leaf or has four sub-rectangles as children. Building the tree takes roughly linear time: we insert the data points one by one, adding nodes as needed, so that each leaf node contains just one (or very few) points. We experimented with various splitting techniques for generating the four child nodes from a parent: (1) Area-based splitting, which involves computing the midpoint of the parent rectangle via

\(\text{mid}_r = \cosh^{-1}\left(\frac{\cosh(\alpha\ \text{max}_{r}) + \cosh(\alpha\ \text{min}_r)}{2}\right)\cdot\frac1\alpha\),

and

\(\text{mid}_\theta = \frac{\max_\theta + \min_\theta}{2}\),

which is the hyperbolic average of the min and max radii and a simple average of the min and max angles, and (2) Length-based splitting, in which the midpoint is computed as

\(\text{mid}_r = \frac{\min_r + \max_r}{2}\),

and

\(\text{mid}_\theta = \frac{\min_\theta + \max_\theta}{2}\),

which is simply the arithmetic mean of the parent’s min and max radii and of its min and max angles. We perform the four-way split using this midpoint and the four corners of the parent rectangle. Once the tree is built, at each iterative gradient update and for each point, we approximate the repulsive forces by traversing the tree via depth-first search, and if the Einstein midpoint,

\(m_i(\{\alpha_{ij}\}_j, \{\mathbf{v}_{ij}\}_j) = \sum_j \left[\frac{\alpha_{ij}\gamma(\mathbf{v}_{ij})}{\sum_l \alpha_{il}\gamma(\mathbf{v}_{il})} \right]\mathbf{v}_{ij}\),

of the points belonging to the rectangle at a given level in the search is sufficiently far away from the point, we simply use the distance to the Einstein midpoint itself in the calculation of the repulsive similarity score. Here \(\gamma(\mathbf{v}) = 1/\sqrt{1 - \|\mathbf{v}\|^2}\) is the Lorentz factor and the \(\alpha_{ij}\) are per-point weights. Intuitively, this is a good approximation: points far away do not have a large impact on the update anyway, so grouping many far-away points together estimates their collective impact on the update in one fell swoop.
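The two splitting rules and the Einstein midpoint can be sketched as follows. This is an illustration under stated assumptions, not the project's implementation: all function names are hypothetical, the parameter \(\alpha\) of the area-based rule defaults to 1, the radii are treated as hyperbolic radii, the weights are uniform unless given, and the points are converted from Poincaré to Klein coordinates before averaging, since the Einstein midpoint is usually formulated in the Klein model.

```python
import math


def split_area(min_r, max_r, min_theta, max_theta, alpha=1.0):
    """Area-based split point of a polar cell."""
    mean_cosh = (math.cosh(alpha * max_r) + math.cosh(alpha * min_r)) / 2.0
    return math.acosh(mean_cosh) / alpha, (min_theta + max_theta) / 2.0


def split_length(min_r, max_r, min_theta, max_theta):
    """Length-based split point: arithmetic means of the bounds."""
    return (min_r + max_r) / 2.0, (min_theta + max_theta) / 2.0


def children(min_r, max_r, min_theta, max_theta, mid_r, mid_theta):
    """Four sub-cells, each given as (min_r, max_r, min_theta, max_theta)."""
    return [
        (min_r, mid_r, min_theta, mid_theta),
        (min_r, mid_r, mid_theta, max_theta),
        (mid_r, max_r, min_theta, mid_theta),
        (mid_r, max_r, mid_theta, max_theta),
    ]


def cell_area(min_r, max_r, min_theta, max_theta, alpha=1.0):
    """Hyperbolic area of a polar cell for curvature -alpha**2."""
    d_cosh = math.cosh(alpha * max_r) - math.cosh(alpha * min_r)
    return (max_theta - min_theta) * d_cosh / alpha ** 2


def lorentz_factor(k):
    """gamma(k) = 1 / sqrt(1 - |k|^2) for a point k in Klein coordinates."""
    return 1.0 / math.sqrt(1.0 - (k[0] ** 2 + k[1] ** 2))


def poincare_to_klein(p):
    s = 2.0 / (1.0 + p[0] ** 2 + p[1] ** 2)
    return (s * p[0], s * p[1])


def klein_to_poincare(k):
    s = 1.0 / (1.0 + math.sqrt(1.0 - (k[0] ** 2 + k[1] ** 2)))
    return (s * k[0], s * k[1])


def einstein_midpoint(points, weights=None):
    """Einstein midpoint of Poincaré-disk points (uniform weights by default)."""
    if weights is None:
        weights = [1.0] * len(points)
    klein = [poincare_to_klein(p) for p in points]
    coeffs = [w * lorentz_factor(k) for w, k in zip(weights, klein)]
    total = sum(coeffs)
    mx = sum(c * k[0] for c, k in zip(coeffs, klein)) / total
    my = sum(c * k[1] for c, k in zip(coeffs, klein)) / total
    return klein_to_poincare((mx, my))


# Split one cell with the area-based rule and list its four children.
cell = (1.0, 3.0, 0.0, math.pi / 2.0)
mid_r, mid_theta = split_area(*cell)
for child in children(*cell, mid_r, mid_theta):
    print(child, cell_area(*child))
```

With the area-based rule, the inner and outer halves of a cell have equal hyperbolic area, whereas the length-based rule produces outer children with much larger area, because area grows exponentially with the radius.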

Results

Conclusion and Future Work

Acknowledgments

We would like to express our sincere gratitude to Dr. Martin Skrodzki for his guidance and encouragement throughout this project.

Link to Repo

References

[1] Mark Gillespie, Boris Springborn, and Keenan Crane. “Discrete Conformal Equivalence of Polyhedral Surfaces”. In: ACM Trans. Graph. 40.4 (2021).

[2] Yunhui Guo et al. “Clipped hyperbolic classifiers are super-hyperbolic classifiers”. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022, pp. 11–20.

[3] Ryohei Shimizu, Yusuke Mukuta, and Tatsuya Harada. “Hyperbolic neural networks++”. In: International Conference on Learning Representations. Vol. 3. 2020.