After six weeks of intensive research and tutorials on applied geometry, we finally are ready to wrap up SGI 2021 and send our Fellows back to their home institutions. Directing this program has been one of the most rewarding experiences of my career, and it has been a pleasure seeing our students advance as scientists, mathematicians, and supportive community members.

SGI’s success is entirely thanks to a huge team of volunteers whose time and energy made the program possible.

Below, I acknowledge the many colleagues who have participated in the planning, leadership, and day-to-day activities of SGI 2021. The sheer length of this list is an inspiring reflection of the supportive community we enjoy in geometry research.

To start, SGI 2021 was only possible with the support of our generous sponsors, whose donations allowed us to offer a stipend to each SGI Fellow commensurate with a summer internship:

- Epic Games, Inc.

- Google Research ExploreCSR

- The MathWorks, Inc.

- Adobe Research

- US Army Research Lab, Mathematical Sciences Branch

- MachineLearningApplications@CSAIL

- Mosek ApS

- National Science Foundation

SGI was organized over the previous year with guidance from colleagues at a variety of institutions worldwide. A Steering Committee provided opinions and advice throughout the planning process:

- Prof. Mikhail Bessmeltsev, Université de Montréal

- Prof. Edward Chien, Boston University

- Prof. Keenan Crane, Carnegie Mellon University

- Dr. Ilke Demir, Intel Corporation

- Prof. Alec Jacobson, University of Toronto

- Prof. Misha Kazhdan, Johns Hopkins University

- Prof. Kathryn Leonard, Occidental College

- Prof. Daniele Panozzo, New York University

- Prof. Adriana Schulz, University of Washington

- Prof. Alla Sheffer, University of British Columbia

- Prof. Amir Vaxman, Utrecht University

- Prof. Etienne Vouga, University of Texas at Austin

Within MIT, several faculty and staff members contributed substantial effort to make the program a success. My team in the Geometric Data Processing (GDP) Group provided advice and volunteer support, from feedback on how to structure the program to hand-packing 72 boxes to ship to our Fellows and volunteers; GDP admin Mieke Moran organized payments and many other key aspects that made the program run smoothly. Our EECS Department Chair Prof. Asu Ozdaglar, AI+D Chair Prof. Antonio Torralba, and CSAIL Director Prof. Daniela Rus advocated for the program and provided support and encouragement as SGI obtained final approval within the MIT administration. CSAIL Assistant Director Carmen Finn provided critical last-minute help to make sure our Fellows were paid on time. Prof. Frédo Durand provided much-needed advice—and allowed me to vent—at several junctures.

SGI 2021 received far more applications than anticipated, and our final cadre of 34 Fellows and 29 additional tutorial week participants was painfully difficult to select. Our admissions committee carefully read all the applications:

- Prof. Mikhail Bessmeltsev, Université de Montréal

- Prof. Alec Jacobson, University of Toronto

- Lingxiao Li, MIT

- Silvia Sellán, University of Toronto

- Prof. Alla Sheffer, University of British Columbia

- Dmitriy Smirnov, MIT

- Dr. Oded Stein, MIT

- Prof. Amir Vaxman, Utrecht University

- Nicholas Vining, University of British Columbia

- Paul Zhang, MIT

The first week of SGI centered around five days of tutorials to get our Fellows up to speed on geometry processing research. Each tutorial day was organized by a different volunteer, who took charge of the content for the entire day and generated valuable course materials we can reuse in the future:

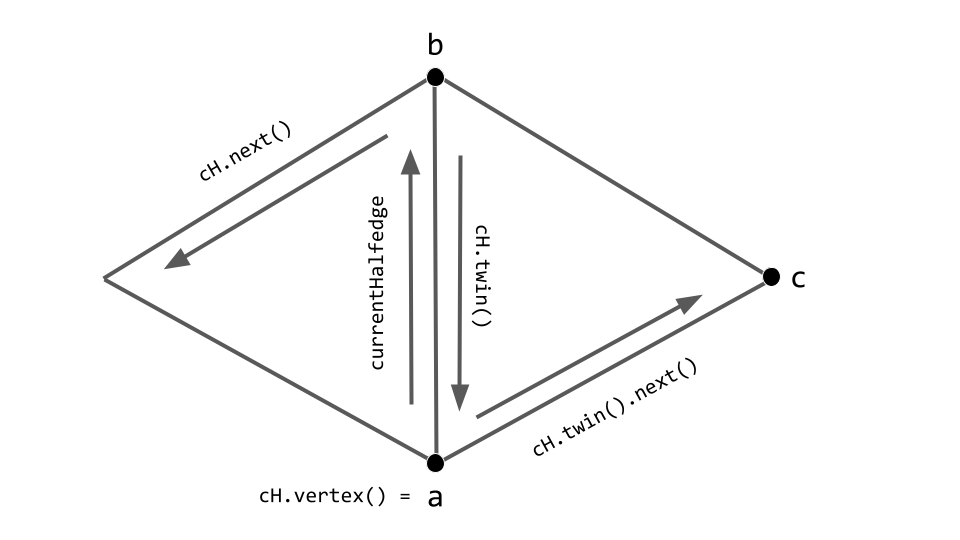

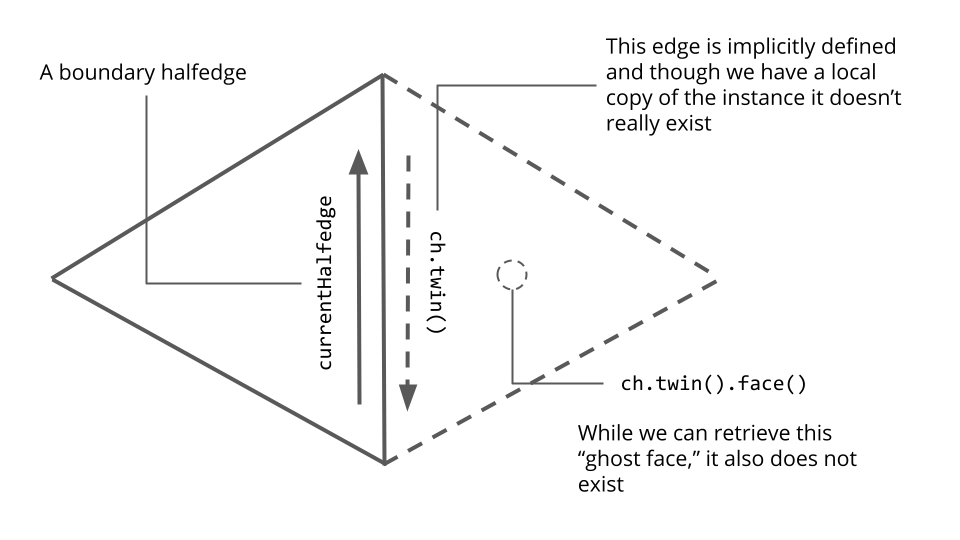

- Day 1: Dr. Oded Stein (MIT), basic techniques in geometry processing

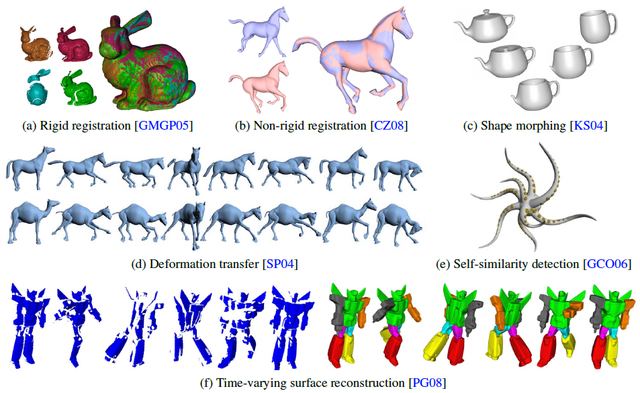

- Day 2: Hsueh-Ti (Derek) Liu (University of Toronto) and Jiayi Eris Zhang (University of Toronto and Stanford), shape deformation

- Day 3: Silvia Sellán (University of Toronto), shape representations

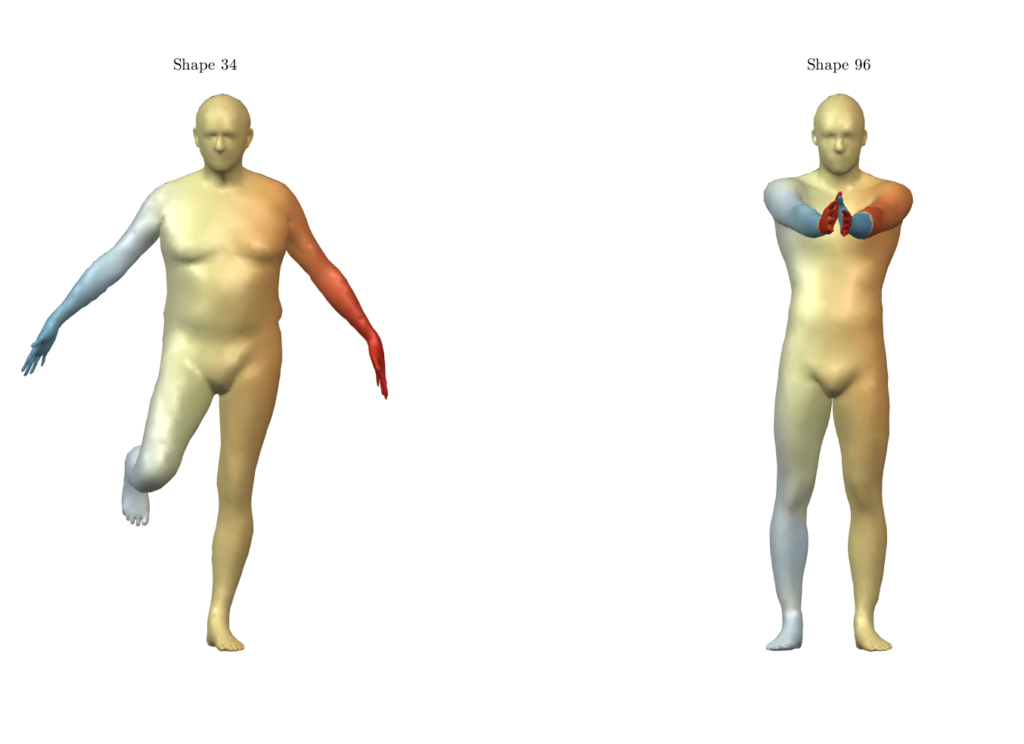

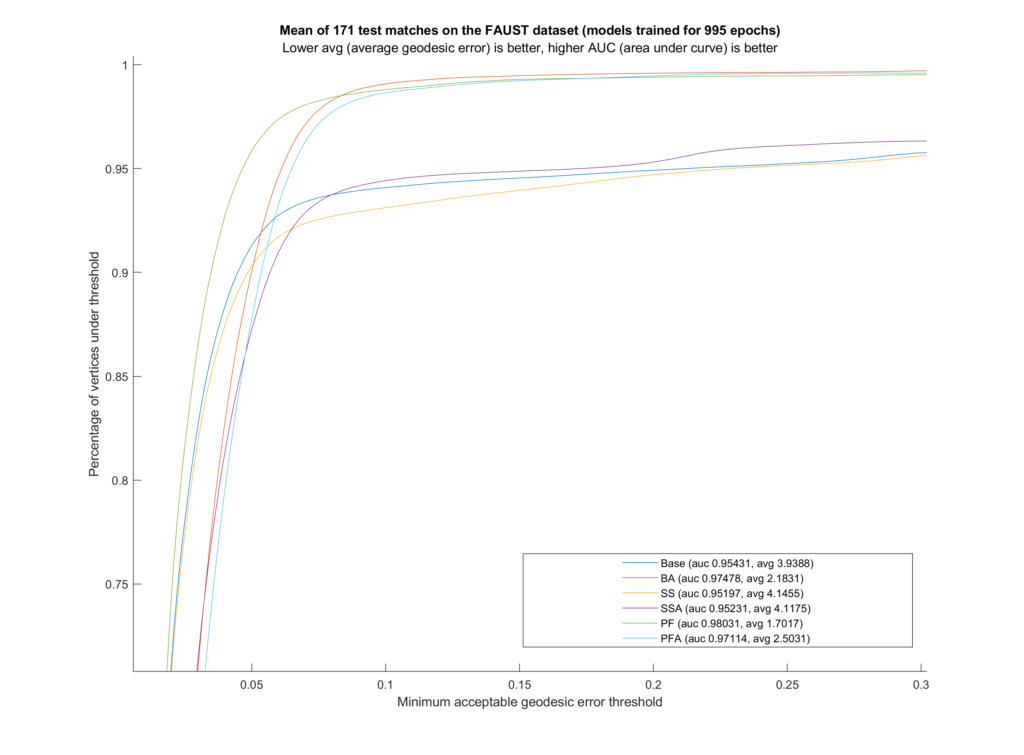

- Day 4: Michal Edelstein (Technion) and Abhishek Sharma (École Polytechnique), shape correspondence

- Day 5: Prof. Amir Vaxman (Utrecht University), directional fields

The remaining five weeks of SGI included a host of 1-3 week research projects, each involving experienced mentors working closely with SGI Fellow. Our full list of projects and project mentors is as follows:

- Dr. Itzik Ben-Shabat: Self-supervised normal estimation using shape implicit neural representation (August 16-August 27)

- Prof. Mikhail Bessmeltsev and Prof. Ed Chien: Singularity-Free Frame Field Design for Line Drawing Vectorization (July 26-August 6)

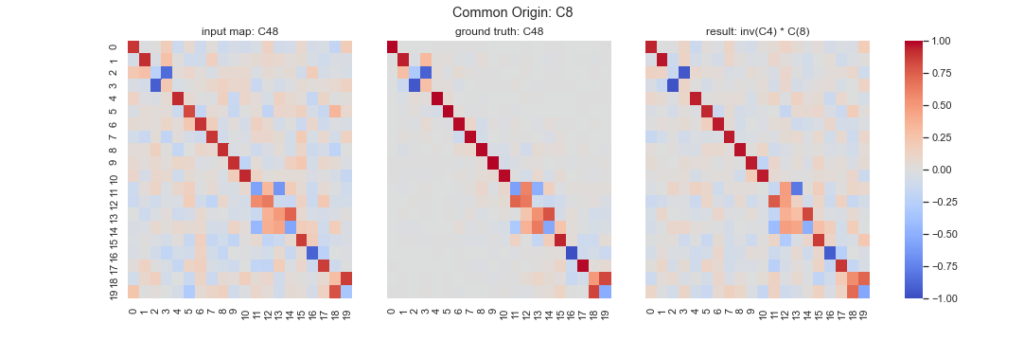

- Dr. Tolga Birdal and Prof. Nina Miolane: Uncertainty Aware 3D Multi-Way Matching via Soft Functional Maps (July 26-August 6)

- Prof. David Bommes and Dr. Pierre-Alexandre Beaufort: Quadrilateral and hexahedral mesh optimization with locally injective maps (July 26-August 6)

- Prof. Marcel Campen: Improved 2D Higher-Order Meshing (July 26-July 30)

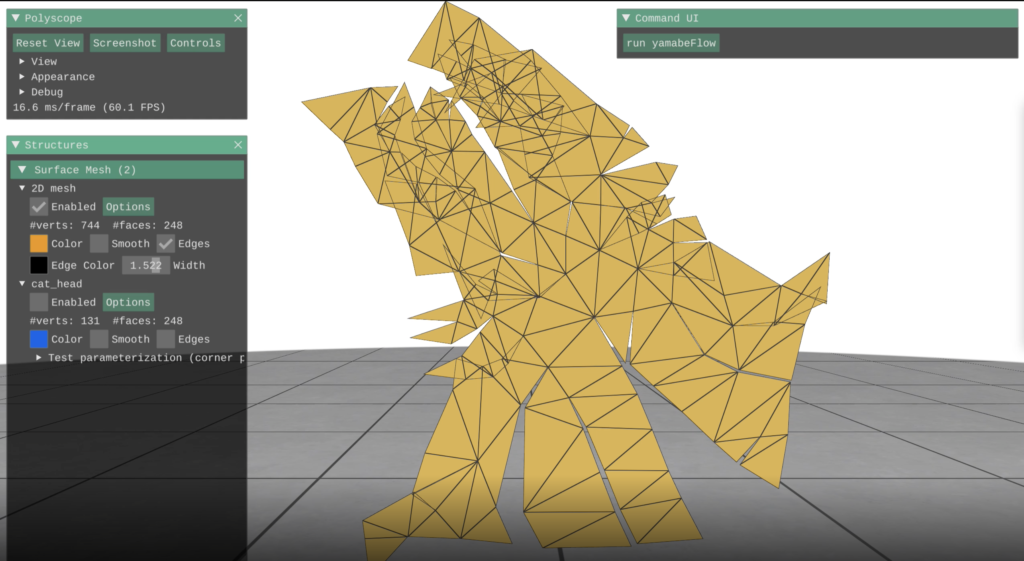

- Prof. Keenan Crane: Surface Parameterization via Intrinsic Triangulations (August 9-August 27)

- Dr. Matheus Gadelha: Learning Classifiers of Parametric Implicit Functions (August 16-August 27)

- Prof. Lin Gao and Jie Yang: Unsupervised partial symmetry detection for 3D models with geometric deep learning (August 16-August 27)

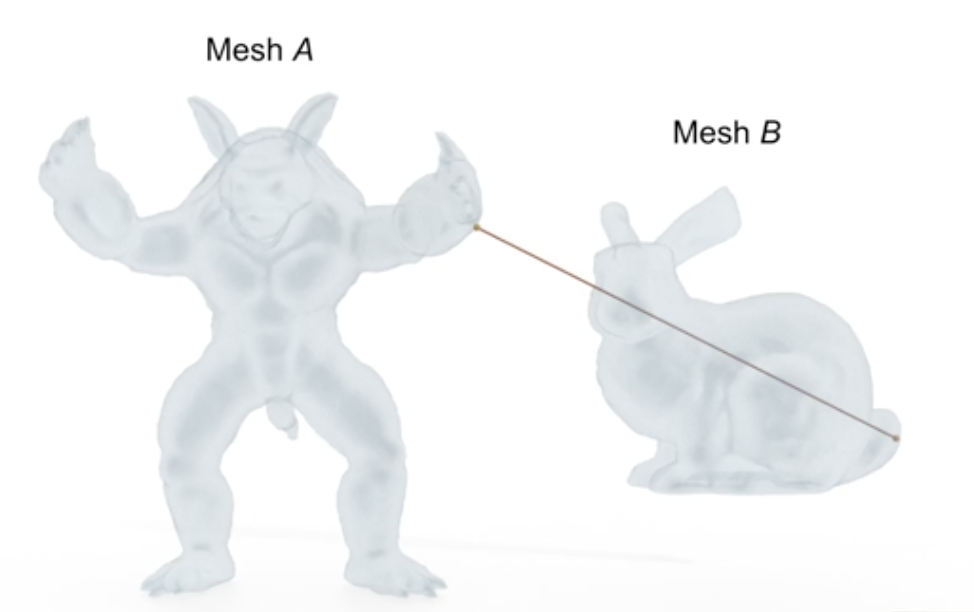

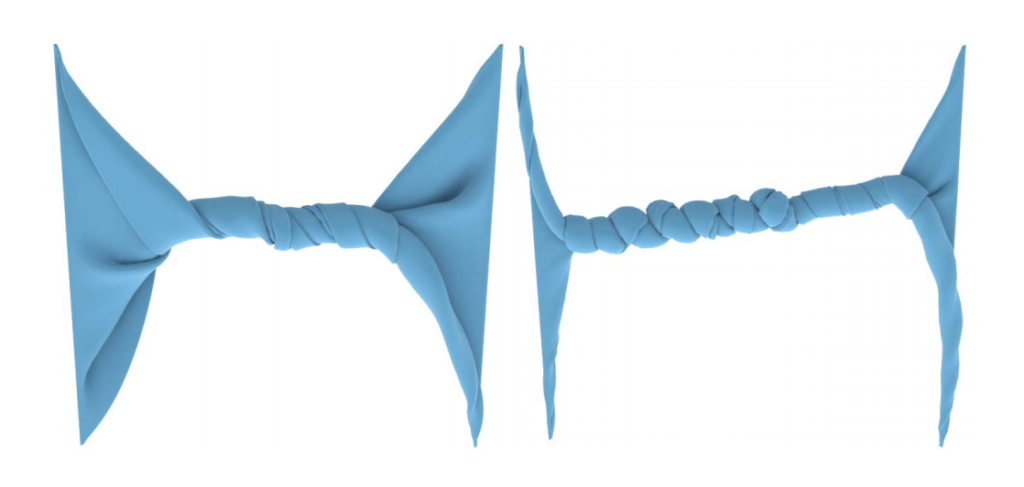

- Christian Hafner and Prof. Bernd Bickel: Joints for Elastic Strips (August 9-August 13)

- Yijiang Huang and Prof. Caitlin Mueller: Design optimization via shape morphing (August 16-August 27)

- Dr. Xiangru Huang: Moving Object Detection from consecutive LiDAR Point Clouds (August 23-August 27)

- Prof. Michael Kazhdan: Multigrid on meshes (July 26-July 30)

- Prof. Paul Kry and Alexander Mercier-Aubin: Incompressible flow on meshes (July 26-August 6)

- Prof. Kathryn Leonard: 2D shape complexity (July 26-July 30)

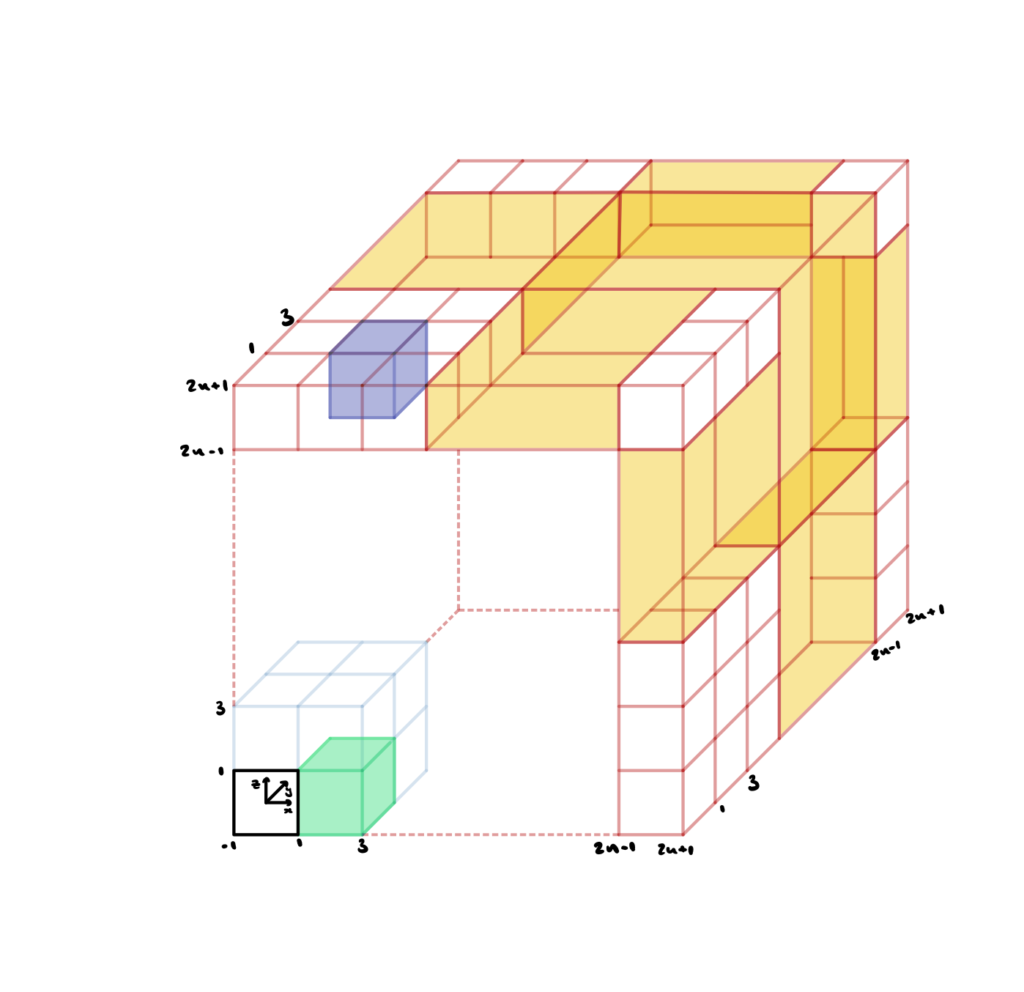

- Prof. David Levin: Optimal Interlocking Parts via Implicit Shape Optimizations (July 26-August 6)

- Angran Li, Kuanren Qian, and Prof. Yongjie Jessica Zhang: Geometric Modeling for Isogeometric Analysis with Engineering Applications (August 2-August 6)

- David Palmer: Bayesian Rotation Synchronization (August 2-August 13); Planar-faced and other specialized hexahedral meshes (August 16-August 27)

- Prof. Jorg Peters (The beauty of) Semi-structured splines (August 9-August 13)

- Alison Pouplin and Dimitris Kalatzis: Learning projection of hierarchical data with a variational auto-encoder onto the Klein disk (July 26-August 6)

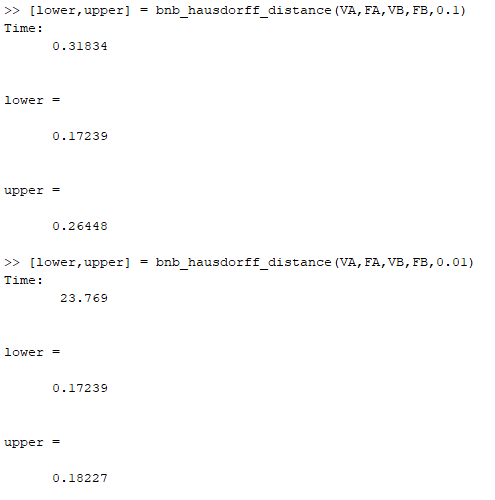

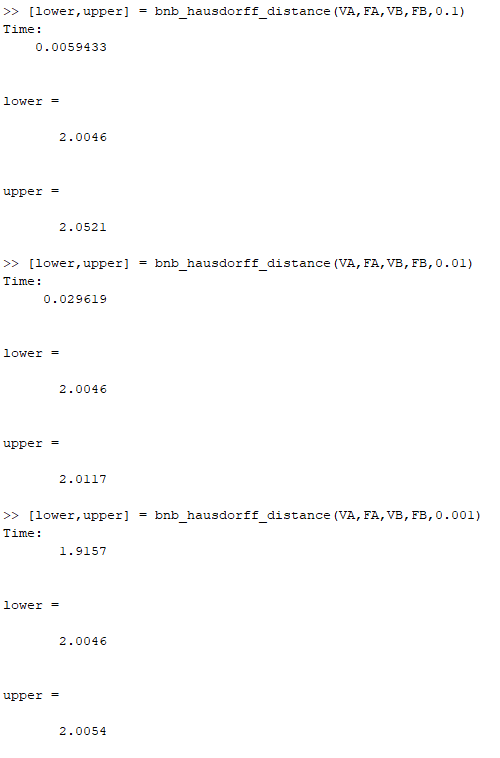

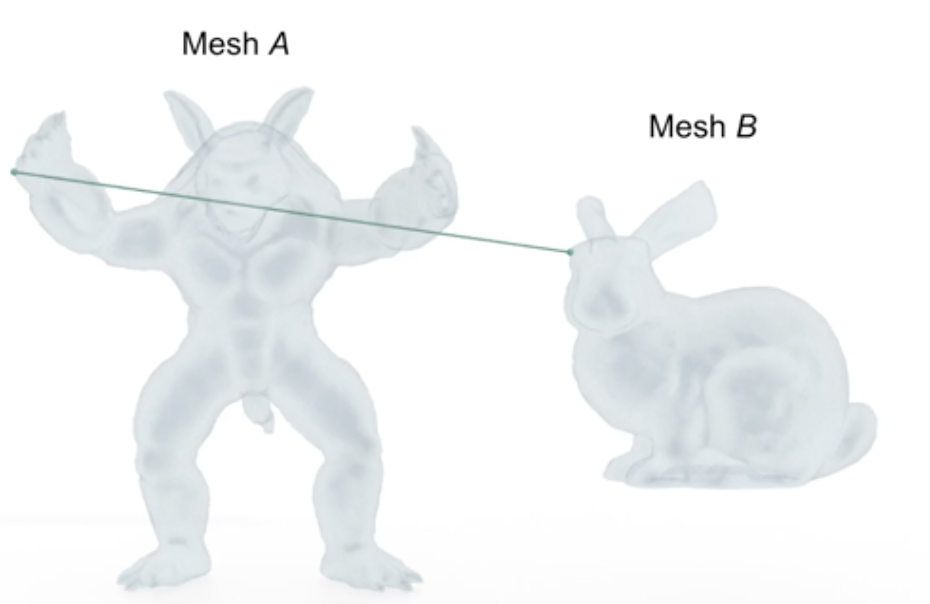

- Prof. Leonardo Sacht: Robust computation of the Hausdorff distance between triangle meshes (August 9-August 20)

- Prof. Yusuf Sahillioglu: Cut optimization for parameterization (August 2-August 13)

- Josua Sassen and Prof. Martin Rumpf: Mesh simplification driven by shell elasticity (August 9-August 20)

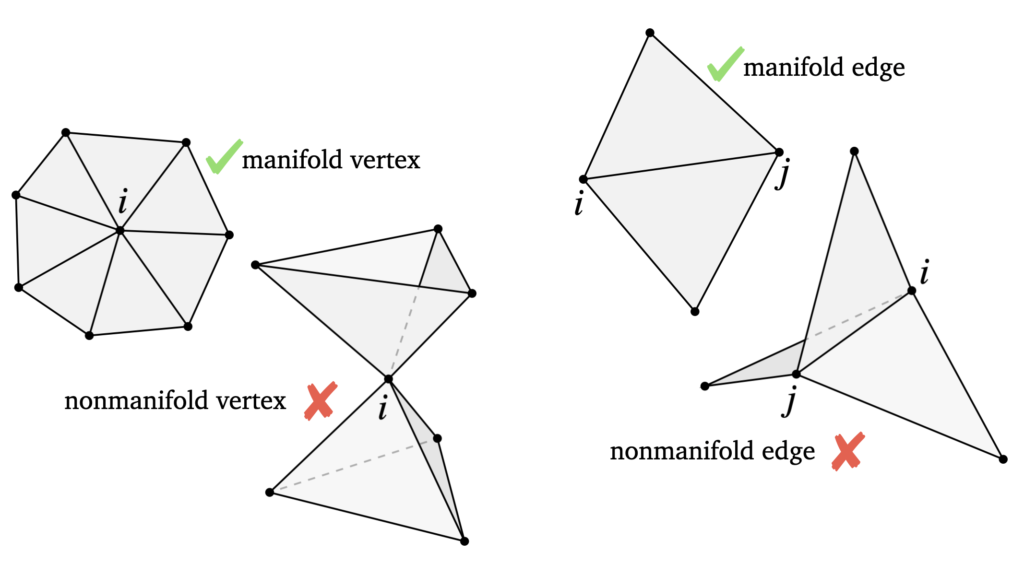

- Dr. Nicholas Sharp, Prof. Etienne Vouga, Josh Vekhter: Nonmanifold Periodic Minimal Surfaces (August 9-August 27)

- Dr. Tal Shnitzer-Dery: Self-similarity loss for shape descriptor learning in correspondence problems (August 9-August 13)

- Dr. Ankita Shukla and Prof. Pavan Turaga: Role of orthogonality in deep representation learning for eco-conservation applications (August 9-August 13)

- Prof. Noah Snavely: Reconstructing the Moon and Earth in 3D from the World’s Photos (August 9-August 13)

- Prof. Justin Solomon: Anisotropic Schrödinger Bridges (August 16-August 27)

- Prof. Marco Tarini: Better Volume-encoded parametrizations (August 2-August 13)

- Prof. Amir Vaxman: High-order directional field design (July 26-August 6)

- Prof. Etienne Vouga: Differentiable Remeshing (July 26-August 6)

- Dr. Stephanie Wang: Discrete Laplacian, area functional, and minimal surfaces (August 16-August 20)

- Paul Zhang: Classifying hexahedral mesh singular vertices (July 26-August 6); Subdivision Surface Mesh Fitting (August 16-August 27)

An intrepid team of TAs helped our participants learn new topics, refined the tutorial activities, and supported the research projects:

- Erik Amezquita, Michigan State University

- Dr. Dena Bazazian, University of Bristol

- Dr. Samir Chowdhury, Stanford University

- Klara Mundilova, MIT

- Nelson Nauata, Simon Fraser University

- Peter Rock, University of Colorado Boulder

- Ritesh Sharma, University of California Merced

- Dr. Antonio Teran-Espinoza, Waymo/Google

- Alberto Tono, Stanford University

- Eric Zhang, Harvard University

Each week of SGI, we had multiple guest speakers drop by to share their research and experiences, and to give advice to the SGI Fellows:

- Prof. Katia Bertoldi, Harvard: Multistable inflatable origami from deployable structures to robots

- Prof. Michael Bronstein, Twitter/Imperial College London: Geometric Deep Learning: the Erlangen Programme of ML

- Prof. Albert Chern, UCSD: Gauge Theory for Vector Field Design

- Prof. Bruce Fischl, Harvard/MGH: Geometry and the Human Brain

- Dr. Fernando de Goes, Pixar: Geometry Processing at Pixar

- Prof. Rana Hanocka, University of Chicago: Deep Learning on Meshes

- Prof. Alexandra Ion, Carnegie-Mellon University: Interactive Structures – Materials that can move, walk, compute

- Prof. Chenfanfu Jiang, UCLA: Developments in smooth optimization contact

- Prof. Theodore Kim, Yale University: Anti-Racist Graphics Research

- Prof. Yaron Lipman, Weizmann Institute: Implicit Neural Representations

- Prof. Mina Konaković Luković, MIT: Transforming Design and Fabrication with Computational Discovery

- Prof. Lakshminarayanan Mahadevan, Harvard: Folding and cutting paper: art and science

- Prof. Caitlin Mueller, MIT: Geometry of High-Performance Architecture

- Prof. Matthias Niessner, TU Munich: Learning to Reconstruct 3D Scenes

- Íñigo Quílez: Intro to SDFs and Examples

- Dr. Elissa Ross, Metafold: Periodic geometry: from art to math and back again

- Dr. Ryan Schmidt, Epic Games and Gradientspace: Geometry Processing in Practice

- Prof. Tamar Shinar, UC Riverside: Partitioned solid-fluid coupling

- Prof. Emily Whiting, Boston University: Mechanics-Based Design for Computational Fabrication

Last but not least, incoming MIT PhD student Leticia Mattos Da Silva organized a talk and panel discussion on the graduate school application process, including a Q&A with Silvia Sellán, Jiayi Eris Zhang, and Oded Stein.

The cast of thousands above is a testament to the dedication of the geometry research community to developing a diverse, energetic community of young researchers.

SGI 2021 comes to a close as quietly as it began, as our Fellows and mentors close one final Zoom call and return to their lives scattered over the globe. In the months and years to come, we look forward to keeping in touch as our Fellows become colleagues, collaborators, and leaders of future generations of researchers.